Mathematica: Optimizing A Raytrace Code: Jon Harrop vs Xah Lee

Recently, in a discussion in newsgroup “comp.lang.python”, Dr Jon Harrop challenged me for a Mathematica optimization problem. In summary, he posted a Mathematica code in which he badmouths Mathematica. I challenged for him to pay me $5 paypal and i'll post code to show how lousy his code is. Then, someone named Thomas M Hermann offered me $20. This page discusses the code.

Here's the full thread:

- Newsgroups: comp.lang.lisp, comp.lang.functional,

- comp.lang.perl.misc, comp.lang.python, comp.lang.java.programmer

- From: Xah Lee

- Date: Sun, 30 Nov 2008

- Subject: Mathematica 7 compares to other languages

- Source groups.google.com

In the following, i'll just post the technical details of this optimization problem.

Optimizing A Raytrace Code

Here's Jon's original code, to be run in Mathematica 6 (notebook: raytrace_v6_original.nb):

delta = Sqrt[$MachineEpsilon]; RaySphere[o_, d_, c_, r_] := Block[{v, b, disc, t1, t2}, v = c - o; b = v.d; disc = Sqrt[b^2 - v.v + r^2]; t2 = b + disc; If[Im[disc] ≠ 0 || t2 ≤ 0, ∞, t1 = b - disc; If[t1 > 0, t1, t2]]] Intersect[o_, d_][{lambda_, n_}, Sphere[c_, r_]] := Block[{lambda2 = RaySphere[o, d, c, r]}, If[lambda2 ≥ lambda, {lambda, n}, {lambda2, Normalize[o + lambda2 d - c]}]] Intersect[o_, d_][{lambda_, n_}, Bound[c_, r_, s_]] := Block[{lambda2 = RaySphere[o, d, c, r]}, If[lambda2 ≥ lambda, {lambda, n}, Fold[Intersect[o, d], {lambda, n}, s]]] neglight = N@Normalize[{1, 3, -2}]; nohit = {∞, {0, 0, 0}}; RayTrace[o_, d_, scene_] := Block[{lambda, n, g, p}, {lambda, n} = Intersect[o, d][nohit, scene]; If[lambda == ∞, 0, g = n.neglight; If[g ≤ 0, 0, {lambda, n} = Intersect[o + lambda d + delta n, neglight][nohit, scene]; If[lambda < ∞, 0, g]]]] Create[level_, c_, r_] := Block[{obj = Sphere[c, r]}, If[level == 1, obj, Block[{a = 3*r/Sqrt[12], Aux}, Aux[x1_, z1_] := Create[level - 1, c + {x1, a, z1}, 0.5 r]; Bound[c, 3 r, {obj, Aux[-a, -a], Aux[a, -a], Aux[-a, a], Aux[a, a]}]]]] scene = Create[1, {0, -1, 4}, 1]; Main[level_, n_, ss_] := Block[{scene = Create[level, {0, -1, 4}, 1]}, Table[Sum[ RayTrace[{0, 0, 0}, N@Normalize[{(x + s/ss/ss)/n - 1/2, (y + Mod[s, ss]/ss)/n - 1/2, 1}], scene], {s, 0, ss^2 - 1}]/ss^2, {y, 0, n - 1}, {x, 0, n - 1}]] AbsoluteTiming[Export["image.pgm", Graphics@Raster@Main[9, 512, 4]]]

Since my version of Mathematica is v4, so i have to make a few changes:

AbsoluteTimingis not available in v4.Timingis used instead.Normalizeis not available in v4. I have to code my own. (for detailed explanation on the code, see: Code Fun: Normalize a Vector of Any Dimension.)

Here's the modified reference implementation in v4: raytrace_v4_reference_imp.nb

Here's my optimized version: raytrace_v4_Xah_Lee.nb.

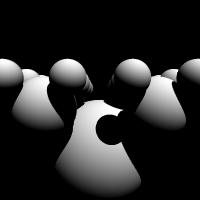

Main[3, 200, 3.]. (converted to png format)Analysis and Explanation

If we rate Jon's Mathematica code on the level of: Beginner, Intermediate, Advanced, where Beginner is someone who had less than than 6 months of coding Mathematica, then that piece of code is Beginner level.

Here's some basic analysis and explanation.

The program has these main functions:

RaySphereIntersectRayTraceCreateMain

The Main calls Create then feeds its output to RayTrace.

Create calls itself recursively, and basically returns a long list of a repeating element, each of the element differ in their parameter.

RayTrace calls Intersect 2 times. Intersect has 2 forms, one of them calls itself recursively. Both forms calls RaySphere once.

So, the core loop is with the Intersect function and RaySphere. Some 99.99% of time are spent there.

The Main[] function calls Create. The create has 3 parameters: level, c, r. The level is a integer for the number of spheres in the raytraced image. The c is a vector for sphere center. The r is radius of the sphere. His input has c and r are integers, and this in Mathematica means computation with exact arithmetics (and automatic kicks into infinite precision if necessary). Changing c and r to float immediately reduced the timing to 0.3 of original.

What a incredible sloppiness! and he intended to use this code in a language speed comparison?

Now, back to the core loop. The RaySphere function contain code calls Im, which is the imaginary part of a complex number!! and if so, it returns the symbol Infinity! The possible result of Infinity is significant because it is used in Intersect to do a numerical comparison in a If expression. So, here in these deep loops, Mathematica's symbolic computation is used for numerical purposes!

So, first optimization at the superficial code form level is to get rid of this symbolic computation.

In RaySphere, instead of checking whether his disc = Sqrt[b^2 - v.v + r^2] has imaginary part, one simply check whether the argument to Sqrt is negative. After getting rid of the symbolic computation, i made the RaySphere function to be a Compiled function Here's the result:

Clear[RaySphere]; RaySphere = Compile[{o1, o2, o3, d1, d2, d3, c1, c2, c3, r}, Block[{v = {c1 - o1, c2 - o2, c3 - o3}, b = d1*(c1 - o1) + d2*(c2 - o2) + d3*(c3 - o3), discriminant = -(c1 - o1)^2 - (c2 - o2)^2 + (d1*(c1 - o1) + d2*(c2 - o2) + d3*(c3 - o3))^2 - (c3 - o3)^2 + r^2, disc, t1, t2}, If[discriminant < 0., myInfinity, disc = Sqrt[discriminant]; If[(t1 = b - disc) > 0., t1, If[(t2 = b + disc) ≤ 0., myInfinity, t2]]]]];

Note that RaySphere is now a function with 10 parameters (3 vectors and a radius). Before, RaySphere is of the form RaySphere[o_, d_, c_, r_] where each of the o, d, c, are vectors. So, this means i'll also have to change how RayTrace is called in Intersect.

I stopped my optimization at this step. The result timing is about 0.2 of the original.

The above are some fundamental things any dummy who claims to code Mathematica for speed should know. Jon has written a time series Mathematica package that he's selling commercially. So, either he got very sloppy with this Mathematica code, or he intentionally made it look bad, or that his Mathematica skill is truely beginner level. Yet he dares to talk bullshit in this thread.

Besides the above basic things, there are several aspects that his code can improve in speed. For example, he used rather complicated pattern matching in the intensive numerical computation part. Namely:

Intersect[o_, d_][{lambda_, n_}, Bound[c_, r_, s_]] Intersect[o_, d_][{lambda_, n_}, Sphere[c_, r_]]

Note that the way the parameters of Intersect defined above is a nested form. The code would be much faster if you just change the forms to:

Intersect[o_, d_, {lambda_, n_}, Bound[c_, r_, s_]] Intersect[o_, d_, {lambda_, n_}, Sphere[c_, r_]]

or even just this:

Intersect[o_, d_, lambda_, n_, c_, r_, s_] Intersect[o_, d_, lambda_, n_, c_, r_]

Also, note that the Intersect is recursive. Namely, the Intersect calls itself. Which form is invoked depends on the pattern matching of the parameters. However, not only that, inside one of the Intersect it uses Fold to nest itself. So, there are 2 recursive calls going on in Intersect. Reducing this recursion to a simple one would speed up the code possibly by a order of magnitude.

Further, if Intersect is made to take a flat sequence of argument as in Intersect[o_, d_, lambda_, n_, c_, r_, s_], then pattern matching can be avoided by making it into a pure function Function[…]. And when it is a Function[…], then Intersect or part of it may be compiled with Compile[…]. When the code is compiled, the speed should be a order of magnitude faster.

Also, he used Block, which is designed for local variables and the scope is dynamic scope. However the local vars used in this are local constants. A proper code would use With instead.

Jon Harrop's site about this raytracing code in several languages: http://www.ffconsultancy.com/languages/ray_tracer/

.

reddit thread. http://www.reddit.com/r/programming/comments/7hip4/troll_jon_harrop_thoroughly_spanked_by_troll_xah/

Advice For Mathematica Optimization

Here's some advice for Mathematica optimization, roughly from most important to less important:

- Any experienced programer knows, that optimization at the algorithm level is far more important than at the level of code construction variation. So, make sure the algorithm used is good, as opposed to doodling with your code forms. If you can optimize your algorithm, the speed up may be a order of magnitude. (for example, various algorithm for sorting algorithms illustrates this.)

- If you are doing numerical computation, always make sure that your input and every intermediate step is using machine precision. This you do by making the numbers in your input using decimal form (For example, use

1.,N[Pi]instead of1,Pi). Otherwise Mathematica will compute by exact arithmetics. - For numerical computation, do not simply slap

N[]into your code. Because the intermediate computation may still be done using exact arithmetic or symbolic computation. - Make sure your core loop, where your calculation is repeated and takes most of the time spent, is compiled, by using

Compile[…]. - When optimizing speed, try to avoid pattern matching. If your function is

f[x_]:= …, try to change it to the form off=Function[x,…]instead. - Do not use complicated patterns if not necessary. For example, use

f[x_,y_]instead off[x_][y_].

Discovered a blog F# For Scientists Misses the Boat On Mathematica Performance , by Sal Mangano. (at Source semanticvector.blogspot.com) Sal talks about how Joh Harrop bad mouth Mathematica. Jon is the guy who perpetually peddles his F# book while bad mouth every other langs in a inciting manner, in lisp, java, functional programing newsgroups, and just about every online forum and blogs he can post. Apparently he uses some media monitoring service (For example, Google Alert) that automatically notifies him any site mentioning his name or his book or Ocaml/F#, because every such blog seem to be infested with his comments.

Apparently, Jon did bad mouth in Mathematica forum before, with this very code about raytracing. (See: Source groups.google.com) Here's a summary: On , Mathematica 7 release is announce in the Mathematica newsgroup (aka MathGroup). MathGroup is a moderated forum. Someone asked a follow up question on parallel computing. Jon sidetracked it and showcases his Mathematica code on raytracing, in the same inciting manner. Daniel Lichtblau, a long time Wolfram employee and a major implementor of Mathematica, posted a relpy that essentially educated him about Mathematica optimization; it pretty much found the same problems i found in Jon's Mathematica code.

Jon's interpretation of Daniel's free tip is this:

FWIW, Wolfram Research themselves already took up my challenge with Daniel Lichtblau producing the fastest Mathematica implementation …

LOL.

Discovered that Sal Mangano is the author of a new book:

Mathematica Cookbook , by Sal Mangano. Buy at amazon .

The book cites one of my article: Concepts and Confusions of Prefix, Infix, Postfix and Lisp Notations (2006) .