Programing Language and Its Machine

Let me repeat my opinion (actually, not an opinion, but a thesis).

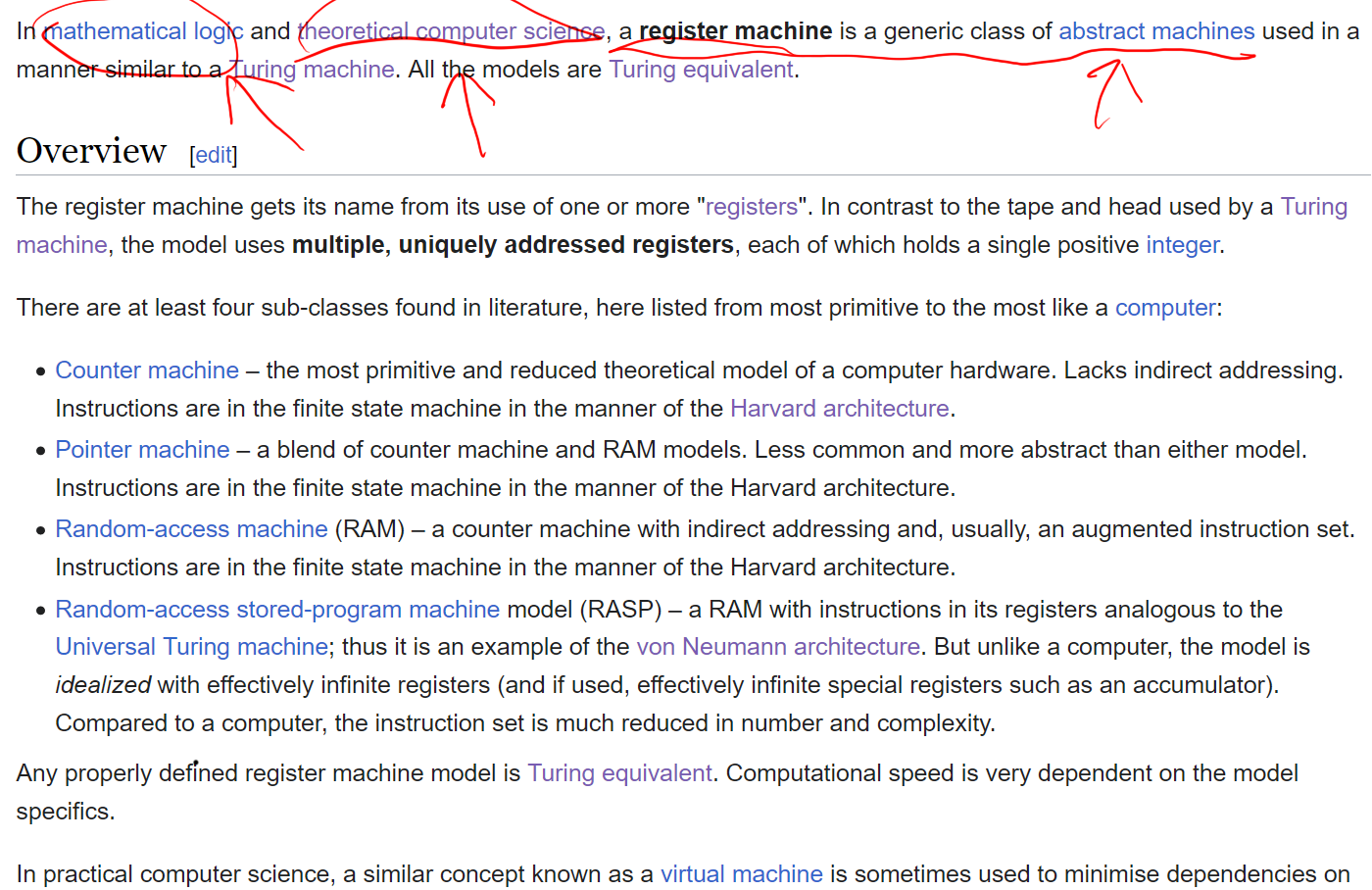

Any programing language has a model of computation. This model is a kind of virtual machine, similar to the concept of an Instruction Set Architecture.

For example, Lisp is based on a list-processing model, with lambda (function application) as the main means of computation. WolframLang is also based on lists, but uses term rewriting as its method of computation. Java is based on “objects” that hold data together with the methods that transform that data.
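To make “term rewriting as a method of computation” concrete, here is a minimal sketch in Python (not Wolfram Language). Expressions are nested tuples, and computing means repeatedly replacing a subterm that matches a rule until no rule applies. The Peano-style addition rules and the names `rule_plus`, `rewrite_once` and `normalize` are hypothetical, chosen only for illustration.

```python
# A term is a string (a constant) or a tuple (head, arg1, arg2, ...).
# Peano numbers: "Z" is zero, ("S", n) is the successor of n.

def rule_plus(term):
    """Addition rules; return the rewritten term, or None if no rule matches."""
    if isinstance(term, tuple) and term[0] == "Plus":
        _, a, b = term
        if a == "Z":                               # Plus[Z, b]    -> b
            return b
        if isinstance(a, tuple) and a[0] == "S":   # Plus[S[a], b] -> S[Plus[a, b]]
            return ("S", ("Plus", a[1], b))
    return None

def rewrite_once(term):
    """Rewrite at the outermost position where a rule matches; None if nothing matches."""
    result = rule_plus(term)
    if result is not None:
        return result
    if isinstance(term, tuple):
        for i, sub in enumerate(term[1:], start=1):
            new_sub = rewrite_once(sub)
            if new_sub is not None:
                return term[:i] + (new_sub,) + term[i + 1:]
    return None

def normalize(term):
    """Keep rewriting until no rule applies (the term is in normal form)."""
    while True:
        new_term = rewrite_once(term)
        if new_term is None:
            return term
        term = new_term

two = ("S", ("S", "Z"))
one = ("S", "Z")
print(normalize(("Plus", two, one)))  # ('S', ('S', ('S', 'Z')))
```

There is no "add instruction" here: the answer 3 emerges purely from pattern matching and replacement, which is a different machine from Lisp's evaluate-a-list-by-applying-a-function.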

However, a programing language's machine model is never explicitly specified, and is rarely discussed or known by typical programers. At most, it is implied, or mentioned in passing, in the language's documentation.

What typical coders do know is the “virtual machine”, as in the Java Virtual Machine (JVM). This virtual machine is different from the programing language's machine model. It is a lower-level model.
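The JVM is a stack machine: its bytecode pushes operands onto an operand stack, and instructions such as `iadd` and `imul` pop them and push the result. A minimal Python sketch of such a stack machine, to show how far it sits below the language's model of objects and expressions. The opcode names `PUSH`, `ADD`, `MUL` and the function `run_stack` are hypothetical, not real JVM bytecode.

```python
def run_stack(program):
    """Execute a list of (opcode, *operands) on an operand stack; return the top of stack."""
    stack = []
    for instr in program:
        op = instr[0]
        if op == "PUSH":
            stack.append(instr[1])
        elif op == "ADD":
            b, a = stack.pop(), stack.pop()   # top of stack is the right operand
            stack.append(a + b)
        elif op == "MUL":
            b, a = stack.pop(), stack.pop()
            stack.append(a * b)
        else:
            raise ValueError(f"unknown opcode: {op}")
    return stack.pop()

# The source-level expression 2 + 3 * 4, lowered to stack code:
program = [("PUSH", 2), ("PUSH", 3), ("PUSH", 4), ("MUL",), ("ADD",)]
print(run_stack(program))  # 14
```

The programmer thinks in expressions or objects; the virtual machine only knows pushes and pops. That gap is the difference between the two models.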

C Is Not a Low-level Language

Interesting article. Good one.

- C Is Not a Low-level Language. Your computer is not a fast PDP-11

- By David Chisnall.

- https://queue.acm.org/detail.cfm?id=3212479

Programing Language and Its Machine

In recent years, I have become more aware that a programing language, or any algorithm, REQUIRES a machine to work with. In practice, that machine is the CPU design. This is fascinating. The machine you have changes the nature of your language entirely. It also changes the language's efficiency profile and the kinds of tasks it is good at.

If you are writing pseudocode or hand-waving in theory, you don't need a machine. But as soon as you actually compute, you need a painstakingly detailed language spec, and an actual machine for the language to run on.

The design of a machine dictates the shape of the programing language and what kinds of tasks it does well. For example, Lisp had Lisp machines, and around 1999 there was talk of a Java CPU. (Note: Java runs on the Java VIRTUAL MACHINE, whose bytecode is in turn translated to actual machine code.)

A Turing machine, with its tape, is an abstract machine. Formal languages (combinators, lambda calculus) and automata are also abstract machines. Integers and set theory are also abstract machines that math works on. As soon as you talk about step-by-step instructions (aka an algorithm), you need a machine.
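What “step-by-step instructions on a machine” means for the Turing tape can be shown with a minimal Turing machine simulator in Python. The transition table is a hypothetical example that increments a binary number on the tape, with the head starting on the rightmost digit; the function name `run_tm` is ours.

```python
def run_tm(tape, head, state, rules, halt="HALT", blank="_"):
    """Run a one-tape Turing machine until it reaches the halt state.

    rules maps (state, symbol) -> (symbol_to_write, move, next_state); move is -1 or +1.
    Returns the non-blank part of the final tape as a string.
    """
    cells = dict(enumerate(tape))          # sparse tape: position -> symbol
    while state != halt:
        symbol = cells.get(head, blank)
        write, move, state = rules[(state, symbol)]
        cells[head] = write
        head += move
    lo, hi = min(cells), max(cells)
    return "".join(cells.get(i, blank) for i in range(lo, hi + 1)).strip(blank)

# Binary increment: trailing 1s become 0s, then the first 0 (or blank) becomes 1.
increment = {
    ("carry", "1"): ("0", -1, "carry"),
    ("carry", "0"): ("1", -1, "HALT"),
    ("carry", "_"): ("1", -1, "HALT"),
}

print(run_tm("1011", head=3, state="carry", rules=increment))  # 1100
```

The whole “algorithm” is the three-row table; it means nothing without the tape, head and state that the machine provides.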

An abacus is a machine. Babbage's difference engine is a machine. 5 stones on sand are a machine. Your 10 fingers are a machine.

A virtual, abstract machine is easy. It exists on paper and can be arbitrary. But for actual running hardware, we have gone from fingers to stones to metal cogwheels to vacuum tubes to solid-state transistors to printed circuits to incomprehensible silicon dies. And there are DNA and quantum machines.

If we just consider the CPUs and GPUs of the current era, there are lots of designs (known as “architectures”). What are the possible designs? What are their pros and cons? What are the barriers to a new design? How do they affect the languages and computing tasks we use today?

C Lang and Go Lang Models of Computation

(The following was written by Steve, aka Stefano.)

Relevant to this discussion, I suggest the first chapter of The Go Programming Language Phrasebook by David Chisnall.

In a few pages, it presents Go's abstract computer model and compares it with the abstract model of C.

The point of a low-level language is to provide an abstract machine model to the programmer that closely reflects the architecture of the concrete machines that it will target. There is no such thing as a universal low-level language: a language that closely represents the architecture of a PDP-11 will not accurately reflect something like a modern GPU or even an old B5000 mainframe. The attraction of C has been that, in providing an abstract model similar to a PDP-11, it is similar to most cheap consumer CPUs.

Over the last decade, this abstraction has become less like the real hardware. The C abstract model represents a single processor and a single block of memory. These days, even mobile phones have multicore processors, and a programming language designed for single-processor systems requires significant effort to use effectively. It is increasingly hard for a compiler to generate machine code from C sources that efficiently uses the resources of the target system.

The aim of Go was to fill the same niche today that C fit into in the ’80s. It is a low-level language for multiprocessor development. C does not provide any primitives for communicating between threads, because C does not recognize threads; they are implemented in libraries. Go, in contrast, is designed for concurrency. It uses a form of C.A.R. Hoare's Communicating Sequential Processes (CSP) formalism to facilitate communication between goroutines.

Creating a goroutine is intended to be much cheaper than creating a thread using a typical C threading library. The main reason for this is the use of segmented stacks in Go implementations. The memory model used by early C implementations was very simple. Code was mapped (or copied) into the bottom of the address space. Heap (dynamic memory) space was put in just above the top of the program, and the stack grew down from the top of the address space. Threading complicated this. The traditional C stack was expected to be a contiguous block of memory. When you create a new thread, you need to allocate a chunk of memory big enough for the maximum stack size. Typically, that's about 1MB of RAM. This means that creating a thread requires allocating 1MB of RAM, even if the thread is only ever going to use a few KB of stack space.

Go functions are more clever. They treat the stack as a linked list of memory allocations. If there is enough space in the current stack page for their use, then they work like C functions; otherwise they will request that the stack grows. A short-lived goroutine will not use more than the 4KB initial stack allocation, so you can create a lot of them without exhausting your address space.

When you're writing single-threaded code, garbage collection is a luxury. It's nice to have, but it's not a vital feature. This changes when you start writing multithreaded code. If you are sharing pointers to an object between multiple threads, then working out exactly when you can destroy the object is incredibly hard. Even implementing something like reference counting is hard. Acquiring a reference in a thread requires an atomic increment operation, and you have to be very careful that objects aren't prematurely deallocated by race conditions. Like Java, and unlike C or C++, Go does not explicitly differentiate between stack and heap allocations. Memory is just memory. (Sorry for the wall of text.)
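The reference-counting point in the quoted passage can be sketched in Python. Python exposes no portable atomic-increment API, so this hypothetical `RefCounted` class uses a `threading.Lock` to make each increment and decrement atomic; without it, two threads could both read the old count and one update would be lost. The class, its methods and the `freed` flag are illustrative assumptions, not from the book.

```python
import threading

class RefCounted:
    """Hypothetical shared object whose lifetime is managed by an explicit reference count."""

    def __init__(self, payload):
        self.payload = payload
        self._count = 1                    # the creator holds the first reference
        self._lock = threading.Lock()      # makes each read-modify-write of _count atomic
        self.freed = False

    @property
    def count(self):
        with self._lock:
            return self._count

    def acquire(self):
        with self._lock:
            if self.freed:                 # in C this would be a silent use-after-free
                raise RuntimeError("acquire after free")
            self._count += 1

    def release(self):
        """Drop one reference; return True if this was the last one and the object was freed."""
        with self._lock:
            self._count -= 1
            last = self._count == 0
            if last:
                self.freed = True          # stand-in for actually destroying the object
                self.payload = None
        return last

obj = RefCounted("shared data")

def worker():
    for _ in range(10_000):
        obj.acquire()
        obj.release()

threads = [threading.Thread(target=worker) for _ in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(obj.count)      # 1: every acquire was matched by a release
print(obj.release())  # True: dropping the creator's reference frees the object
```

Even this toy needs a lock around every count change, which is the overhead the passage says makes manual lifetime management hard in multithreaded code.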

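Go's goroutines and channels have no exact Python equivalent, but the CSP style the quote describes (independent processes that share no state and communicate only by sending messages) can be approximated with threads, using `queue.Queue` as a channel. This is an analogy under that assumption, not a model of goroutine scheduling or stack allocation; the `producer`/`squarer` pipeline and the `DONE` sentinel are hypothetical.

```python
import queue
import threading

DONE = object()  # sentinel playing the role of closing a channel

def producer(out_ch, n):
    """Send 0..n-1 down the channel, then signal completion."""
    for i in range(n):
        out_ch.put(i)
    out_ch.put(DONE)

def squarer(in_ch, out_ch):
    """Receive numbers and send their squares: no shared state, only messages."""
    while True:
        x = in_ch.get()
        if x is DONE:
            out_ch.put(DONE)
            return
        out_ch.put(x * x)

nums = queue.Queue(maxsize=1)     # capacity 1, loosely like a small buffered Go channel
squares = queue.Queue(maxsize=1)

threading.Thread(target=producer, args=(nums, 5)).start()
threading.Thread(target=squarer, args=(nums, squares)).start()

results = []
while (item := squares.get()) is not DONE:
    results.append(item)
print(results)  # [0, 1, 4, 9, 16]
```

In C, the queue, the threads and the synchronization all come from libraries; in Go, channels and `go` are part of the language itself, which is the quote's point.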
Instruction Set Architecture (ISA)

A machine can be abstracted into an “Instruction Set Architecture”, that is, a set of instructions. These make up a machine language, the lowest form of programing language. Each instruction does something like move this stone over there, put this number here, add 2 numbers, etc.

For example, take our 10-finger machine. Its instruction set is: R0U, R0D, R1U, R1D, …, L0U, L0D, L1U, L1D, etc. (R means right hand, L means left hand. 0 is the thumb, 1 is the index finger. U means raise the finger, D means put it down.)
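The 10-finger machine can be written down as a working ISA. Below is a minimal Python sketch, assuming the machine's entire state is which fingers are raised, and reading the raised fingers as a count. Numbering the middle, ring and little fingers 2 to 4 extends the pattern in the text (0 = thumb, 1 = index) and is our assumption, as are the names `execute`, `run_fingers` and `count_raised`.

```python
HANDS = ("L", "R")
FINGERS = range(5)  # 0 = thumb, 1 = index, 2 = middle, 3 = ring, 4 = little (assumed)

def execute(state, instr):
    """Apply one instruction such as 'R0U' or 'L3D'; return the new finger state."""
    hand, finger, direction = instr[0], int(instr[1]), instr[2]
    if hand not in HANDS or finger not in FINGERS or direction not in ("U", "D"):
        raise ValueError(f"illegal instruction: {instr}")
    new_state = dict(state)
    new_state[(hand, finger)] = (direction == "U")   # True means raised
    return new_state

def run_fingers(program):
    """Start with all fingers down and execute the instructions in order."""
    state = {(h, f): False for h in HANDS for f in FINGERS}
    for instr in program:
        state = execute(state, instr)
    return state

def count_raised(state):
    return sum(state.values())

final = run_fingers(["R0U", "R1U", "R2U", "R1D", "L4U"])
print(count_raised(final))  # 3
```

Any algorithm for this machine, such as counting to 3, is just a sequence of these instructions, and it only makes sense on a machine with two hands of five fingers.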

One example of an Instruction Set Architecture is the x86 instruction set.

Microarchitecture

Given an Instruction Set Architecture, there can be many different implementations in hardware. The design of such an implementation is called a microarchitecture. It involves the actual design and making of the circuits and electronics.

So there are two interesting levels in designing a machine to run a programing language. One is the Instruction Set Architecture, and beneath it is the so-called microarchitecture. Microarchitecture is the design of the physical machine, under the constraints of electronics and physical reality.

For our interest, which is programing language design and how it is bound by the design of the machine it runs on, the important part is the Instruction Set Architecture. It is like the API (Application Programing Interface) of a machine.
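The ISA-versus-microarchitecture split works like an interface versus its implementations. A minimal Python sketch, assuming a hypothetical 3-instruction ISA (`LI`, `ADD`, `MUL` on 4 registers): both “microarchitectures” give identical results for the same machine code, but one multiplies in a single simulated cycle while the other uses shift-and-add, taking one cycle per bit of the multiplier. Class names and cycle costs are made up for illustration.

```python
from abc import ABC, abstractmethod

class Machine(ABC):
    """The 'ISA': defines what each instruction means. Subclasses are 'microarchitectures'."""

    def __init__(self):
        self.regs = [0] * 4
        self.cycles = 0

    def run(self, program):
        for op, dst, a, b in program:
            if op == "LI":                    # load immediate: regs[dst] = a (b unused)
                self.regs[dst] = a
                self.cycles += 1
            elif op == "ADD":
                self.regs[dst] = self.regs[a] + self.regs[b]
                self.cycles += 1
            elif op == "MUL":
                self.regs[dst] = self.multiply(self.regs[a], self.regs[b])
            else:
                raise ValueError(f"unknown opcode: {op}")
        return self.regs

    @abstractmethod
    def multiply(self, x, y): ...

class FastMultiplier(Machine):
    def multiply(self, x, y):
        self.cycles += 1                      # dedicated multiplier circuit: one cycle
        return x * y

class ShiftAddMultiplier(Machine):
    def multiply(self, x, y):
        if y < 0:
            raise ValueError("this sketch only handles non-negative multipliers")
        result = 0
        while y:                              # cheaper hardware: one cycle per bit of y
            self.cycles += 1
            if y & 1:
                result += x
            x <<= 1
            y >>= 1
        return result

program = [("LI", 0, 6, 0), ("LI", 1, 7, 0), ("MUL", 2, 0, 1), ("ADD", 3, 2, 0)]
fast, slow = FastMultiplier(), ShiftAddMultiplier()
print(fast.run(program), fast.cycles)  # [6, 7, 42, 48] 4
print(slow.run(program), slow.cycles)  # [6, 7, 42, 48] 6
```

Software written against the ISA runs unchanged on either; only speed and cost differ, which is what lets a vendor ship cheap and fast implementations of the same instruction set.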

I guess CPU design carries as much baggage of human habit as programing languages do. For a language, change has to wait until a generation dies off. For CPUs, it is corporate power struggles to keep their own designs in use, plus a high barrier to entry. (Of course, there is also backward compatibility, for both.)

So when we see programing language speed comparisons, we can say, and it is technically true, that it's not because Haskell or functional programing is inherently slower than C, but rather because the CPUs we use today are designed to run C-like instructions.

CPU Design, Random Notes

Apparently, there was a completely different CPU design in the 1980s, called the Transputer: chips designed to be connected together for parallel computing. Its language is Occam. Very nice. Occam is more tightly bound to the Transputer than C is to x86 chips.

In this foray into the relation between algorithm and machine, I learned quite a lot. Many familiar terms, such as ISA, CISC, RISC, x86, Xeon, MIPS, ECC RAM, superscalar, instruction-level parallelism and FLOPS, I now understand in a coherent way.

CPU Overviews

Instruction Set Architecture (ISA)

In computer science, an instruction set architecture (ISA) is an abstract model of a computer. It is also referred to as architecture or computer architecture. A realization of an ISA, such as a central processing unit (CPU), is called an implementation.

In general, an ISA defines the supported data types, the registers, the hardware support for managing main memory, fundamental features (such as the memory consistency, addressing modes, virtual memory), and the input/output model of a family of implementations of the ISA.

An ISA specifies the behavior of machine code running on implementations of that ISA in a fashion that does not depend on the characteristics of that implementation, providing binary compatibility between implementations. This enables multiple implementations of an ISA that differ in performance, physical size, and monetary cost (among other things), but that are capable of running the same machine code, so that a lower-performance, lower-cost machine can be replaced with a higher-cost, higher-performance machine without having to replace software. It also enables the evolution of the microarchitectures of the implementations of that ISA, so that a newer, higher-performance implementation of an ISA can run software that runs on previous generations of implementations.

[2021-03-05 Wikipedia Instruction set architecture]

Microarchitecture

In computer engineering, microarchitecture is the way a given instruction set architecture (ISA) is implemented in a particular processor. A given ISA may be implemented with different microarchitectures; implementations may vary due to different goals of a given design or due to shifts in technology.

Computer architecture is the combination of microarchitecture and instruction set architecture.

[2021-03-05 Wikipedia Microarchitecture]

Complex Instruction Set Computer (CISC)

A complex instruction set computer (CISC /ˈsɪsk/) is a computer in which single instructions can execute several low-level operations (such as a load from memory, an arithmetic operation, and a memory store) or are capable of multi-step operations or addressing modes within single instructions. The term was retroactively coined in contrast to reduced instruction set computer (RISC)[1] and has therefore become something of an umbrella term for everything that is not RISC, where the typical differentiating characteristic is that most RISC designs use uniform instruction length for almost all instructions, and employ strictly separate load and store instructions.

Examples of CISC architectures include complex mainframe computers to simplistic microcontrollers where memory load and store operations are not separated from arithmetic instructions. Specific instruction set architectures that have been retroactively labeled CISC are System/360 through z/Architecture, the PDP-11 and VAX architectures, Data General Nova and many others. Well known microprocessors and microcontrollers that have also been labeled CISC in many academic publications include the Motorola 6800, 6809 and 68000-families; the Intel 8080, iAPX432 and x86-family; the Zilog Z80, Z8 and Z8000-families; the National Semiconductor 32016 and NS320xx-line; the MOS Technology 6502-family; the Intel 8051-family; and others.

Some designs have been regarded as borderline cases by some writers. For instance, the Microchip Technology PIC has been labeled RISC in some circles and CISC in others. The 6502 and 6809 have both been described as RISC-like, although they have complex addressing modes as well as arithmetic instructions that operate on memory, contrary to the RISC principles.

[2021-03-05 Wikipedia Complex instruction set computer]

Reduced Instruction Set Computer (RISC)

A reduced instruction set computer, or RISC (/rɪsk/), is a computer with a small, highly optimized set of instructions, rather than the more specialized set often found in other types of architecture, such as in a complex instruction set computer (CISC). The main distinguishing feature of RISC architecture is that the instruction set is optimized with a large number of registers and a highly regular instruction pipeline, allowing a low number of clock cycles per instruction (CPI). Core features of a RISC philosophy are a load/store architecture, in which memory is accessed through specific instructions rather than as a part of most instructions in the set, and requiring only single-cycle instructions.

Although a number of computers from the 1960s and 1970s have been identified as forerunners of RISCs, the modern concept dates to the 1980s. In particular, two projects at Stanford University and the University of California, Berkeley are most associated with the popularization of this concept. Stanford's MIPS would go on to be commercialized as the successful MIPS architecture, while Berkeley's RISC gave its name to the entire concept and was commercialized as the SPARC. Another success from this era was IBM's effort that eventually led to the IBM POWER instruction set architecture, PowerPC, and Power ISA. As these projects matured, a variety of similar designs flourished in the late 1980s and especially the early 1990s, representing a major force in the Unix workstation market as well as for embedded processors in laser printers, routers and similar products.

The many varieties of RISC designs include ARC, Alpha, Am29000, ARM, Atmel AVR, Blackfin, i860, i960, M88000, MIPS, PA-RISC, Power ISA (including PowerPC), RISC-V, SuperH, and SPARC. The use of ARM architecture processors in smartphones and tablet computers such as the iPad and Android devices provided a wide user base for RISC-based systems. RISC processors are also used in supercomputers, such as Fugaku, which, as of June 2020, is the world's fastest supercomputer.

[2021-03-05 Wikipedia RISC]
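The CISC/RISC contrast in the two excerpts above (one instruction that loads, computes and stores, versus separate load/store instructions) can be sketched with a toy machine in Python. The mnemonics `LI`, `ADDM`, `LOAD`, `ADD`, `STORE` and the function `run_toy` are hypothetical, only loosely modeled on x86-style memory operands and RISC load/store code.

```python
def run_toy(program, memory):
    """Execute a toy program; memory maps address -> value. Returns (memory, instruction count)."""
    regs = {"r1": 0, "r2": 0}
    count = 0
    for op, *args in program:
        count += 1
        if op == "ADDM":                  # CISC style: memory[addr] += reg in ONE instruction
            addr, reg = args
            memory[addr] = memory[addr] + regs[reg]
        elif op == "LOAD":                # RISC style: only LOAD and STORE touch memory
            reg, addr = args
            regs[reg] = memory[addr]
        elif op == "STORE":
            reg, addr = args
            memory[addr] = regs[reg]
        elif op == "ADD":                 # arithmetic works on registers only
            dst, a, b = args
            regs[dst] = regs[a] + regs[b]
        elif op == "LI":                  # load an immediate constant into a register
            reg, value = args
            regs[reg] = value
        else:
            raise ValueError(f"unknown opcode: {op}")
    return memory, count

# Goal: memory[100] += 5
cisc = [("LI", "r2", 5), ("ADDM", 100, "r2")]
risc = [("LI", "r2", 5), ("LOAD", "r1", 100), ("ADD", "r1", "r1", "r2"), ("STORE", "r1", 100)]

print(run_toy(cisc, {100: 37}))  # ({100: 42}, 2)
print(run_toy(risc, {100: 37}))  # ({100: 42}, 4)
```

The RISC version uses more, simpler, uniform instructions, which is what makes the “highly regular instruction pipeline” in the excerpt possible; the CISC version packs the same work into fewer, more complex instructions.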

RISC Chips

ARM CPU

ARM (previously an acronym for Advanced RISC Machines and originally Acorn RISC Machine) is a family of reduced instruction set computing (RISC) architectures for computer processors, configured for various environments. Arm Ltd. develops the architecture and licenses it to other companies, who design their own products that implement one of those architectures—including systems-on-chips (SoC) and systems-on-modules (SoM) that incorporate different components such as memory, interfaces, and radios. It also designs cores that implement this instruction set and licenses these designs to a number of companies that incorporate those core designs into their own products.

There have been several generations of the ARM design. The original ARM1 used a 32-bit internal structure but had a 26-bit address space that limited it to 64 MB of main memory. This limitation was removed in the ARMv3 series, which has a 32-bit address space, and several additional generations up to ARMv7 remained 32-bit. Released in 2011, the ARMv8-A architecture added support for a 64-bit address space and 64-bit arithmetic with its new 32-bit fixed-length instruction set.[3] Arm Ltd. has also released a series of additional instruction sets for different rules; the "Thumb" extension adds both 32- and 16-bit instructions for improved code density, while Jazelle added instructions for directly handling Java bytecodes, and more recently, JavaScript. More recent changes include the addition of simultaneous multithreading (SMT) for improved performance or fault tolerance.[4]

Due to their low costs, minimal power consumption, and lower heat generation than their competitors, ARM processors are desirable for light, portable, battery-powered devices — including smartphones, laptops and tablet computers, as well as other embedded systems. However, ARM processors are also used for desktops and servers, including the world's fastest supercomputer. With over 180 billion ARM chips produced, as of 2021, ARM is the most widely used instruction set architecture (ISA) and the ISA produced in the largest quantity. Currently, the widely used Cortex cores, older “classic” cores, and specialised SecurCore cores variants are available for each of these to include or exclude optional capabilities.

[2021-03-05 Wikipedia ARM architecture]
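The 64 MB limit in the ARM excerpt follows directly from the address width: n address bits can name 2^n distinct byte addresses. A quick check in Python (the helper name is ours):

```python
MiB = 2 ** 20
GiB = 2 ** 30

def addressable_bytes(address_bits):
    """Number of distinct byte addresses reachable with the given address width."""
    return 2 ** address_bits

print(addressable_bytes(26) // MiB)  # 64: ARM1's 26-bit address space
print(addressable_bytes(32) // GiB)  # 4: the 32-bit address space from ARMv3 on
```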

Other notable RISC chips:

- RISC-V

- MIPS architecture

- Stanford MIPS

Apple M1 Chip

The X86 CISC Pestilence

Intel Pentium CPU

Pentium is a brand used for a series of x86 architecture-compatible microprocessors produced by Intel since 1993. In their form as of November 2011, Pentium processors are considered entry-level products that Intel rates as "two stars",[1] meaning that they are above the low-end Atom and Celeron series, but below the faster Intel Core lineup, and workstation Xeon series.

[2021-03-05 Wikipedia Pentium]

Intel Core CPU

Intel Core are streamlined midrange consumer, workstation and enthusiast computers central processing units (CPU) marketed by Intel Corporation. These processors displaced the existing mid- to high-end Pentium processors at the time of their introduction, moving the Pentium to the entry level, and bumping the Celeron series of processors to the low end. Identical or more capable versions of Core processors are also sold as Xeon processors for the server and workstation markets.

As of June 2017, the lineup of Core processors includes the Intel Core i3, Intel Core i5, Intel Core i7, and Intel Core i9, along with the X-series of Intel Core CPUs.

Intel Xeon CPU

Xeon (/ˈziːɒn/ ZEE-on) is a brand of x86 microprocessors designed, manufactured, and marketed by Intel, targeted at the non-consumer workstation, server, and embedded system markets. It was introduced in June 1998. Xeon processors are based on the same architecture as regular desktop-grade CPUs, but have advanced features such as support for ECC memory, higher core counts, support for larger amounts of RAM, larger cache memory and extra provision for enterprise-grade reliability, availability and serviceability (RAS) features responsible for handling hardware exceptions through the Machine Check Architecture. They are often capable of safely continuing execution where a normal processor cannot due to these extra RAS features, depending on the type and severity of the machine-check exception (MCE). Some also support multi-socket systems with two, four, or eight sockets through use of the Quick Path Interconnect (QPI) bus.

Some shortcomings that make Xeon processors unsuitable for most consumer-grade desktop PCs include lower clock rates at the same price point (since servers run more tasks in parallel than desktops, core counts are more important than clock rates), usually an absence of an integrated graphics processing unit (GPU), and lack of support for overclocking. Despite such disadvantages, Xeon processors have always had popularity among some desktop users (video editors and other power users), mainly due to higher core count potential, and higher performance to price ratio vs. the Core i7 in terms of total computing power of all cores. Since most Intel Xeon CPUs lack an integrated GPU, systems built with those processors require a discrete graphics card or a separate GPU if computer monitor output is desired.[1]

[2021-03-05 Wikipedia Xeon]

AMD Ryzen CPU

Ryzen (/ˈraɪzən/ RY-zən) is a brand of x86-64 microprocessors designed and marketed by Advanced Micro Devices (AMD) for desktop, mobile, server, and embedded platforms based on the Zen microarchitecture. It consists of central processing units (CPUs) marketed for mainstream, enthusiast, server, and workstation segments and accelerated processing units (APUs) marketed for mainstream and entry-level segments and embedded systems applications. Ryzen is especially significant for AMD since it was a completely new design and marked the corporation's return to the high-end CPU market after many years of near total absence.

[2021-03-05 Wikipedia Ryzen]

Intel x86 must die

x86

x86 is a family of instruction set architectures[a] initially developed by Intel based on the Intel 8086 microprocessor and its 8088 variant. The 8086 was introduced in 1978 as a fully 16-bit extension of Intel's 8-bit 8080 microprocessor, with memory segmentation as a solution for addressing more memory than can be covered by a plain 16-bit address. The term "x86" came into being because the names of several successors to Intel's 8086 processor end in "86", including the 80186, 80286, 80386 and 80486 processors.

Many additions and extensions have been added to the x86 instruction set over the years, almost consistently with full backward compatibility.[b] The architecture has been implemented in processors from Intel, Cyrix, AMD, VIA Technologies and many other companies; there are also open implementations, such as the Zet SoC platform (currently inactive).[2] Nevertheless, of those, only Intel, AMD, VIA Technologies, and DM&P Electronics hold x86 architectural licenses, and from these, only the first two are actively producing modern 64-bit designs.

The term is not synonymous with IBM PC compatibility, as this implies a multitude of other computer hardware; embedded systems, and general-purpose computers, used x86 chips before the PC-compatible market started,[c] some of them before the IBM PC (1981) debut.

As of 2021, most personal computers, laptops and game consoles sold are based on the x86 architecture, while mobile categories such as smartphones or tablets are dominated by ARM; at the high end, x86 continues to dominate compute-intensive workstation and cloud computing segments.[3]

[2021-03-05 Wikipedia X86]
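The “memory segmentation” in the x86 excerpt is how the 16-bit 8086 reached more than 64 KB: in real mode, the physical address is the 16-bit segment value shifted left 4 bits (times 16) plus a 16-bit offset, giving a 20-bit, 1 MB address space. A small Python sketch of that calculation; the function name is ours, and the final mask models the 8086 wrapping around at 1 MB.

```python
def physical_address(segment, offset):
    """8086 real-mode address translation: segment * 16 + offset, wrapped to 20 bits."""
    if not (0 <= segment <= 0xFFFF and 0 <= offset <= 0xFFFF):
        raise ValueError("segment and offset are both 16-bit values")
    return ((segment << 4) + offset) & 0xFFFFF

print(hex(physical_address(0x1234, 0x0010)))  # 0x12350
print(hex(physical_address(0xFFFF, 0xFFFF)))  # 0xffef: wraps past 1 MB on an 8086
# Different segment:offset pairs can name the same byte:
print(physical_address(0x1000, 0x0020) == physical_address(0x1002, 0x0000))  # True
```

This kind of trick, added to stretch a 16-bit design, is part of the backward-compatibility baggage every later x86 chip still carries.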

x86-64 (also known as x64, x86_64, AMD64 and Intel 64)[note 1] is a 64-bit version of the x86 instruction set, first released in 1999. It introduced two new modes of operation, 64-bit mode and compatibility mode, along with a new 4-level paging mode.
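With 4-level paging, a 48-bit virtual address is split into four 9-bit table indices (PML4, page-directory-pointer table, page directory, page table) plus a 12-bit offset within a 4 KiB page. A Python sketch of that split only; it does not walk real page tables, and the function name is ours.

```python
def split_virtual_address(va):
    """Split a 48-bit x86-64 virtual address into 4-level paging indices and page offset."""
    offset = va & 0xFFF           # bits 0-11: byte within a 4 KiB page
    pt = (va >> 12) & 0x1FF       # bits 12-20: page table index
    pd = (va >> 21) & 0x1FF       # bits 21-29: page directory index
    pdpt = (va >> 30) & 0x1FF     # bits 30-38: page-directory-pointer table index
    pml4 = (va >> 39) & 0x1FF     # bits 39-47: PML4 index
    return pml4, pdpt, pd, pt, offset

va = (1 << 39) | (2 << 30) | (3 << 21) | (4 << 12) | 0x5
print(split_virtual_address(va))  # (1, 2, 3, 4, 5)
print(9 * 4 + 12)                 # 48 address bits in total
```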

x86/CISC-induced hacks:

- Instruction-level parallelism

- Speculative execution
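Instruction-level parallelism means the CPU finds instructions in a sequential program that do not depend on each other and runs them at the same time. A simplified Python sketch, assuming unlimited execution units and one cycle per instruction (real superscalar CPUs have neither): each instruction is scheduled one cycle after the latest instruction that produces one of its inputs. Only true (read-after-write) dependencies are modeled, and the register names are hypothetical.

```python
def schedule(program):
    """Assign each instruction the earliest cycle at which all its inputs are ready.

    program: list of (dest, [sources]). Returns one start cycle per instruction.
    """
    ready = {}                    # register -> cycle in which its latest value is available
    cycles = []
    for dest, sources in program:
        start = max((ready.get(src, 0) for src in sources), default=0)
        cycles.append(start)
        ready[dest] = start + 1   # result available one cycle later
    return cycles

program = [
    ("a", []),            # a = load from memory
    ("b", []),            # b = load from memory  (independent of a)
    ("c", ["a", "b"]),    # c = a + b             (must wait for a and b)
    ("d", []),            # d = load from memory  (independent: runs alongside a and b)
    ("e", ["c", "d"]),    # e = c * d
]
cycles = schedule(program)
print(cycles)                                                      # [0, 0, 1, 0, 2]
print(len(program), "instructions in", max(cycles) + 1, "cycles")  # 5 instructions in 3 cycles
```

The program text is sequential, in the C-like model; the hardware quietly reorders it to keep more circuits busy.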

All Software Needs to Be Rewritten, Again and Again

In the past, whenever a new chip came into popular products, such as Apple switching to PowerPC in 1994, then to Intel in 2006, or the rise of ARM CPUs in smartphones, I read that all software needed to be rewritten. I was always annoyed and shocked, and didn't know why. Now I know.

Because an algorithm is fundamentally meaningful only with respect to a specific machine.

Note that new CPU designs are inevitable. That means all software will need to be rewritten again. (In practice, it needs to be recompiled, which means the compilers need to be rewritten, and that takes years to mature. New languages may also be invented that better describe instructions for the new machine.)
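Why a new CPU means new compilers, not just new source code: the same program must be lowered into a different instruction set. A minimal Python sketch of two compiler back ends for two hypothetical targets, a stack machine and a three-address register machine, emitting code from the same expression tree. The instruction names and the tuple expression format are assumptions for illustration.

```python
# Expression tree: an int, or (op, left, right) with op in "+", "*".
OPS = {"+": "ADD", "*": "MUL"}

def compile_stack(expr):
    """Target A: a stack machine (push operands; operators pop two and push one)."""
    if isinstance(expr, int):
        return [f"PUSH {expr}"]
    op, left, right = expr
    return compile_stack(left) + compile_stack(right) + [OPS[op]]

def compile_register(expr):
    """Target B: a register machine with three-address instructions and a fresh register per node."""
    code = []
    counter = 0

    def gen(e):
        nonlocal counter
        reg = f"r{counter}"
        counter += 1
        if isinstance(e, int):
            code.append(f"LI {reg}, {e}")
        else:
            op, left, right = e
            a, b = gen(left), gen(right)
            code.append(f"{OPS[op]} {reg}, {a}, {b}")
        return reg

    gen(expr)
    return code

expr = ("+", 2, ("*", 3, 4))   # 2 + 3 * 4
print(compile_stack(expr))     # ['PUSH 2', 'PUSH 3', 'PUSH 4', 'MUL', 'ADD']
print(compile_register(expr))  # ['LI r1, 2', 'LI r3, 3', 'LI r4, 4', 'MUL r2, r3, r4', 'ADD r0, r1, r2']
```

The source expression is identical; everything downstream of it is target-specific, and a real compiler back end also has to handle register allocation, calling conventions and scheduling for each new machine.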

Dedicated Hardware Is an Order-of-Magnitude Speedup

What if we don't rewrite, and instead rely on an emulation layer? An order of magnitude slower! That's why IBM's Deep Blue chess machine had its own dedicated chips, audio wants its own Digital Signal Processor (DSP), and Google invented its own Tensor Processing Unit (TPU) for machine learning. And video games need a Graphics Processing Unit (GPU).

I was playing Second Life (a 3D virtual world, like a 3D video game) from around 2007 to 2010, and didn't have a dedicated graphics card. I thought that if I just turned all settings to minimal, I'd be fine. No matter what I tried, it was super slow, no comparison to anyone who had even a basic GPU.

〔see Xah Second Life〕

Back in the 1990s, I saw a demo of an SGI (Silicon Graphics) machine's 3D prowess. And in 1999, a gamer coworker showed me his latest fancy graphics card with a bunch of jargon: something something texture, something something shadowing. But when I asked him about 3D raytracing, it turned out to give zero speedup; it still took hours to render one picture. I was greatly puzzled. How is it possible that a fantastic, expensive graphics machine is useless for rendering 3D??

Now I know. The tasks a GPU is designed for are, in general, different from raytracing 3D scenes. A 1999 graphics card accelerated rasterization, drawing textured triangles, which is a different algorithm from raytracing. Playing pre-recorded video (e.g. watching a movie on YouTube), rendering 3D video game scenes, drawing 2D windows on the screen (your browser), manipulating photos in Photoshop, and lots of other computations that we all think of as graphics are actually very different when it comes to building dedicated hardware to run their algorithms.

See also: CUDA

See Also

Interesting read:

- Every 7.8μs your computer's memory has a hiccup

- By Marek Majkowski.

- https://blog.cloudflare.com/every-7-8us-your-computers-memory-has-a-hiccup/

2018-12-08 https://spectrum.ieee.org/tech-history/silicon-revolution/25-microchips-that-shook-the-world

CPU hidden instructions 〔Breaking the x86 ISA By Christopher Domas. @ githubusercontent.com/xoreaxeaxeax〕

Models of Computation

Programing Languages and their Machines

- Programing Language and Its Machine

- Programing Languages and Their Computational Models

- Costs of Programing Language Operations

- Why C Language Has the Main Function

- What is Closure in Programing Language (2012)

- Computer Science, Modeling Modern Software and Hardware on an Abacus

- Why is Array Access Constant Time

- On GPU vs CPU Algorithms Compilation, Cpp, Parallel Computation, Wolfram Physics (2023)