On GPU vs CPU Algorithms Compilation, Cpp, Parallel Computation, Wolfram Physics (2023)

on GPU vs CPU algorithms compilation, C++, parallel computation, Wolfram Physics

by BruceKnowsHow, 2023-05-22

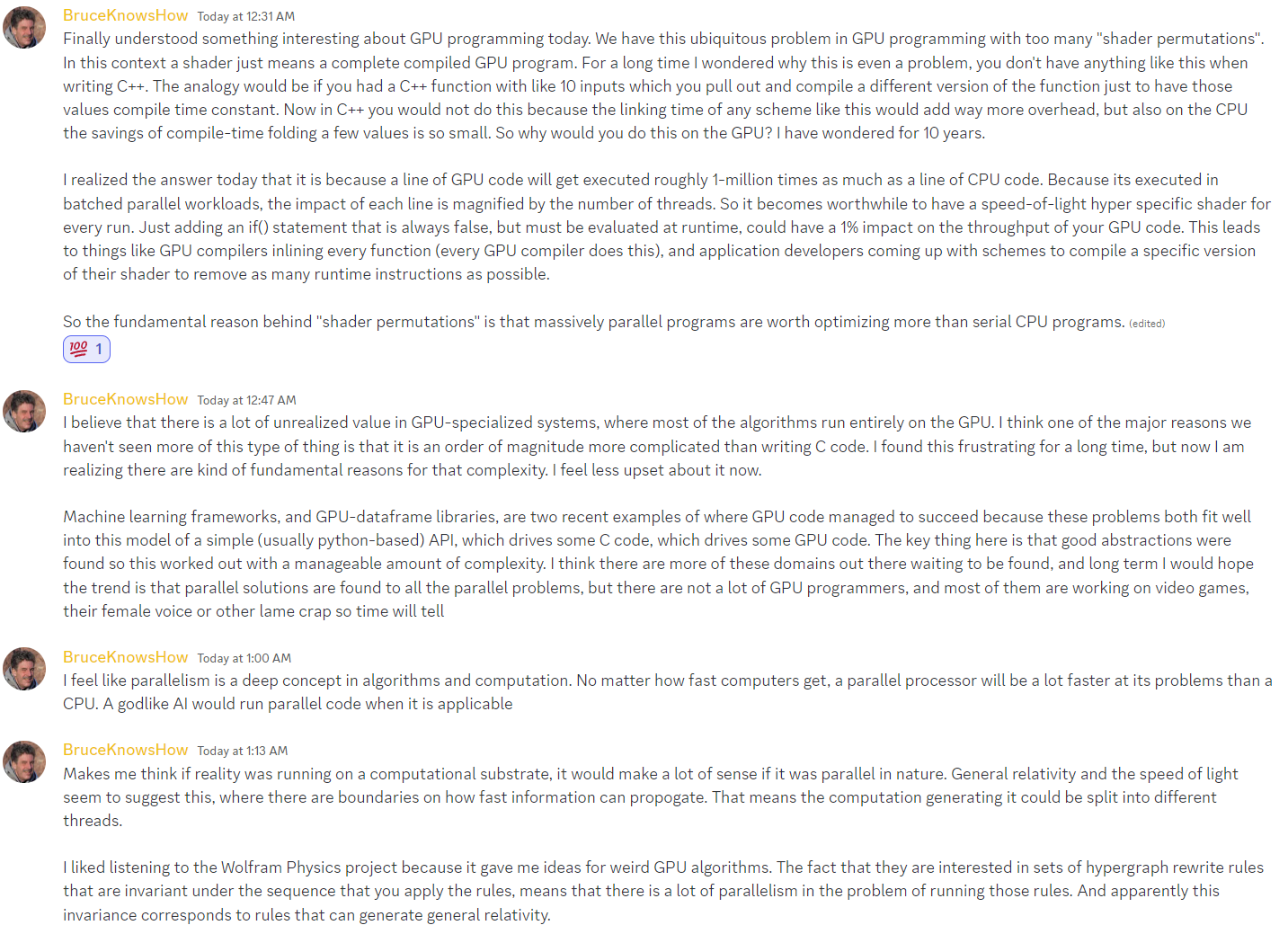

- Finally understood something interesting about GPU programming today.

- We have this ubiquitous problem in GPU programming with too many "shader permutations".

- In this context a shader just means a complete compiled GPU program.

- For a long time I wondered why this is even a problem, you don't have anything like this when writing C++.

- The analogy would be if you had a C++ function with like 10 inputs which you pull out and compile a different version of the function just to have those values compile time constant.

- Now in C++ you would not do this because the linking time of any scheme like this would add way more overhead, but also on the CPU the savings of compile-time folding a few values is so small.

- So why would you do this on the GPU?

- I have wondered for 10 years.

- I realized the answer today that it is because a line of GPU code will get executed roughly I-million times as much as a line of CPU code.

- Because its executed in batched parallel workloads, the impact of each line is magnified by the number of threads.

- So it becomes worthwhile to have a speed-of-light hyper specific shader for every run.

- Just adding an if() statement that is always false, but must be evaluated at runtime, could have a 1% impact on the throughput Of your GPU code.

- This leads to things like GPU compilers inlining every function (every GPU compiler does this), and application developers coming up with schemes to compile a specific version of their shader to remove as many runtime instructions as possible.

- So the fundamental reason behind "shader permutations" is that massively parallel programs are worth optimizing more than serial CPU programs.

- I believe that there is a lot of unrealized value in GPU-specialized systems, where most of the algorithms run entirely on the GPU.

- I think one of the major reasons we haven't seen more Of this type Of thing is that it is an order Of magnitude more complicated than writing C code.

- I found this frustrating for a long time, but now I am realizing there are kind of fundamental reasons for that complexity.

- I feel less upset about it now.

- Machine learning frameworks, and GPU-dataframe libraries, are two recent examples of where GPU code managed to succeed because these problems both fit well into this model of a simple (usually python-based) API, which drives some C code, which drives some GPU code.

- The key thing here is that good abstractions were found so this worked out with a manageable amount of complexity.

- I think there are more of these domains out there waiting to be found, and long term I would hope the trend is that parallel solutions are found to all the parallel problems, but there are not a lot of GPU programmers, and most of them are working on video games, their female voice or other lame crap so time will tell

- I feel like parallelism is a deep concept in algorithms and computation.

- No matter how fast computers get, a parallel processor will be a lot faster at its problems than a CPU.

- A godlike A1 would run parallel code when it is applicable

- Makes me think if reality was running on a computational substrate, it would make a lot of sense if it was parallel in nature.

- General relativity and the speed of light seem to suggest this, where there are boundaries on how fast information can propogate.

- That means the computation generating it could be split into different threads.

- I liked listening to the Wolfram Physics project because it gave me ideas for weird GPU algorithms.

- The fact that they are interested in sets of hypergraph rewrite rules that are invariant under the sequence that you apply the rules, means that there is a lot of parallelism in the problem of running those rules.

- And apparently this invariance corresponds to rules that can generate general relativity.

Grok explains

### Overview of the Article

- The article, titled "on GPU vs CPU algorithms compilation, C++, parallel computation, Wolfram Physics," is a reflective discussion formatted as a series of chat messages from a user named "BruceKnowsHow." It explores why GPU programming often involves more aggressive optimizations than CPU programming, particularly for algorithms in parallel computing.

- The core idea revolves around how GPUs handle massive parallelism differently from CPUs, making certain techniques—like compiling specialized versions of code—highly valuable for performance gains.

- The piece ties this into broader implications for fields like machine learning and even theoretical physics, emphasizing the untapped potential of parallel algorithms.

### Key Realization: Shader Permutations and Why GPUs Need Them

- The article kicks off with a personal "aha" moment about "shader permutations." In GPU programming, developers often compile multiple tailored versions of their code (called shaders) for different scenarios, treating inputs as compile-time constants to eliminate unnecessary runtime checks.

- This seems wasteful at first—why not just write flexible code with if-statements?—but it's justified because GPUs execute code in huge batches, running the same line millions of times in parallel.

- A tiny inefficiency, like an always-false `if()` statement evaluated at runtime, can shave off 1% of throughput across the entire workload.

- On CPUs, which process tasks more serially, such optimizations yield negligible benefits and add compilation overhead, making them impractical.

- In essence, the author argues: a single line of GPU code gets executed roughly **1 million times more often** than the equivalent on a CPU due to this batched parallelism.

- This amplifies the payoff of hyper-optimized, "permuted" shaders.

### GPU vs. CPU: Core Differences in Algorithm Execution

The discussion contrasts the two architectures head-on:

- **GPUs**: Built for parallel workloads, like rendering graphics or training neural networks.

- They thrive on simple, repetitive tasks where thousands of threads run the same code simultaneously.

- Compilers can inline everything aggressively, and developers exploit this by pre-compiling variants to strip out dead code.

- **CPUs**: Optimized for sequential, general-purpose tasks with branching logic.

- Here, compile-time tricks like constant folding (simplifying expressions known at compile time) save little time, and the linking overhead from permutations outweighs any gains.

- This leads to a deeper insight: GPU algorithms succeed when problems fit a "simple API → C code → GPU code" pipeline, where abstractions hide the complexity.

- CPU algorithms, by contrast, don't demand such specialization because execution frequency is lower.

### Examples of GPU-Winning Algorithms

The article doesn't dive into code snippets but highlights real-world successes:

- **Machine Learning Frameworks** (e.g., TensorFlow or PyTorch): These use Python APIs to orchestrate C++ backends that feed data to GPUs.

- Parallel matrix multiplications and convolutions shine here, as GPUs crunch massive datasets far faster than CPUs.

- **GPU-Dataframe Libraries** (like RAPIDS cuDF): Similar to Pandas but GPU-accelerated, they parallelize data operations like filtering or joins, leveraging the same batched model.

- It also nods to the **Wolfram Physics Project**, where hypergraph rewrite rules (a way to model the universe computationally) are sequence-independent, hinting at inherent parallelism ripe for GPU implementation.

- No specific benchmarks are given, but the 1% throughput hit from a bad `if()` serves as a proxy for how sensitive GPU algos are to inefficiencies.

### Broader Insights and Philosophical Ties

- Beyond tech, the author muses on parallelism as a "deep concept" in computation:

- GPUs outpace CPUs on parallelizable problems, but few domains beyond games and AI fully exploit this—there's huge potential for more "GPU-specialized systems."

- An advanced AI would intuitively choose parallel code when it fits, much like humans pick tools for tasks.

- Tying into physics: If the universe is a "computational substrate" (like Wolfram's model), it might run parallel by nature, with the speed of light as a parallelism boundary (preventing instant info sharing across distances, akin to relativity).

- GPU programming is "an order of magnitude more complicated" than C++, but that's not a flaw—it's a feature born from the architecture's power.

### Conclusions and Takeaways

- The piece ends optimistically, hoping for more breakthroughs in parallel algos despite the scarcity of GPU experts (many stuck in gaming).

- It doesn't prescribe fixes but implies: Look for problems with batched, uniform computations—they're GPU gold.

- If you're into parallel computing, this article underscores why GPUs aren't just "fast hardware" but a mindset shift for algorithm design.

- Overall, it's a concise, insightful read blending engineering with big-picture thinking, perfect for devs pondering why their CUDA code feels so finicky compared to vanilla C++.

Programing Languages and their Machines

- Programing Language and Its Machine

- Programing Languages and Their Computational Models

- Costs of Programing Language Operations

- Why C Language Has the Main Function

- What is Closure in Programing Language (2012)

- Computer Science, Modeling Modern Software and Hardware on an Abacus

- Why is Array Access Constant Time

- On GPU vs CPU Algorithms Compilation, Cpp, Parallel Computation, Wolfram Physics (2023)