Mathematical Logic, Learning Notes

Mathematical logic is often divided into the fields of

- set theory

- model theory

- computability theory (also known as recursion theory)

- proof theory

These areas share basic results on logic, particularly first-order logic, and definability. In computer science (particularly in the ACM Classification) mathematical logic encompasses additional topics not detailed in this article; see Logic in computer science for those.

Since its inception, mathematical logic has both contributed to and been motivated by the study of foundations of mathematics. This study began in the late 19th century with the development of axiomatic frameworks for geometry, arithmetic, and analysis. In the early 20th century it was shaped by David Hilbert's program to prove the consistency of foundational theories. Results of Kurt Gödel, Gerhard Gentzen, and others provided partial resolution to the program, and clarified the issues involved in proving consistency. Work in set theory showed that almost all ordinary mathematics can be formalized in terms of sets, although there are some theorems that cannot be proven in common axiom systems for set theory. Contemporary work in the foundations of mathematics often focuses on establishing which parts of mathematics can be formalized in particular formal systems (as in reverse mathematics) rather than trying to find theories in which all of mathematics can be developed.

First-order logic

First-order logic, also known as first-order predicate calculus and predicate logic, is a collection of formal systems used in mathematics, philosophy, linguistics, and computer science. First-order logic uses quantified variables over non-logical objects and allows the use of sentences that contain variables. Rather than propositions such as “Socrates is a man”, one can have expressions of the form “there exists X such that X is Socrates and X is a man”, where “there exists” is a quantifier and X is a variable.[1] This distinguishes it from propositional logic, which does not use quantifiers or relations.[2]
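Over a finite domain, a quantified sentence can be evaluated by simply checking every element. The following is a minimal sketch of that idea, not a general first-order logic evaluator: the helper names `there_exists` and `for_all`, the toy domain, and the set `men` (standing in for the relation "is a man") are all assumptions made for illustration.

```python
def there_exists(domain, predicate):
    """Existential quantifier: some element of the domain satisfies the predicate."""
    return any(predicate(x) for x in domain)


def for_all(domain, predicate):
    """Universal quantifier: every element of the domain satisfies the predicate."""
    return all(predicate(x) for x in domain)


# Hypothetical finite domain and interpretation of the relation "is a man".
domain = ["Socrates", "Plato", "Athens"]
men = {"Socrates", "Plato"}

# "There exists X such that X is Socrates and X is a man."
socrates_is_a_man = there_exists(domain, lambda x: x == "Socrates" and x in men)

# "For all X, X is a man." (false here, because "Athens" is in the domain)
everything_is_a_man = for_all(domain, lambda x: x in men)

print(socrates_is_a_man, everything_is_a_man)
```

Propositional logic has no counterpart to the variable `x` or the predicate argument; it could only treat “Socrates is a man” as a single indivisible truth value.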

Model theory

In mathematics, model theory is the study of classes of mathematical structures (e.g. groups, fields, graphs, universes of set theory) from the perspective of mathematical logic. The objects of study are models of theories in a formal language. A set of sentences in a formal language is called a theory; a model of a theory is a structure (e.g. an interpretation) that satisfies the sentences of that theory.
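As a small illustration of “a structure that satisfies the sentences of a theory”, the sketch below takes the group axioms as the theory and checks by brute force whether a finite structure is a model of it. The function name `is_group` and the choice of the integers modulo 5 as the example structure are assumptions made for illustration; this only works for finite structures.

```python
from itertools import product


def is_group(elements, op, identity):
    """Check whether the finite structure (elements, op, identity) satisfies the group axioms."""
    elements = set(elements)
    closed = all(op(a, b) in elements for a, b in product(elements, repeat=2))
    associative = all(
        op(op(a, b), c) == op(a, op(b, c)) for a, b, c in product(elements, repeat=3)
    )
    has_identity = all(op(identity, a) == a and op(a, identity) == a for a in elements)
    has_inverses = all(
        any(op(a, b) == identity and op(b, a) == identity for b in elements)
        for a in elements
    )
    return closed and associative and has_identity and has_inverses


z5 = range(5)

# Integers modulo 5 under addition: a model of the group axioms.
additive_is_model = is_group(z5, lambda a, b: (a + b) % 5, identity=0)

# The same carrier under multiplication is not a model: 0 has no inverse.
multiplicative_is_model = is_group(z5, lambda a, b: (a * b) % 5, identity=1)

print(additive_is_model, multiplicative_is_model)
```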

Model theory recognises and is intimately concerned with a duality: it examines semantical elements (meaning and truth) by means of syntactical elements (formulas and proofs) of a corresponding language. To quote the first page of Chang & Keisler (1990):[1]

• universal algebra + logic = model theory.

Model theory developed rapidly during the 1990s, and a more modern definition is provided by Wilfrid Hodges (1997):

• model theory = algebraic geometry − fields,

although model theorists are also interested in the study of fields. Other nearby areas of mathematics include combinatorics, number theory, arithmetic dynamics, analytic functions, and non-standard analysis.

In a similar way to proof theory, model theory is situated in an area of interdisciplinarity among mathematics, philosophy, and computer science. The most prominent professional organization in the field of model theory is the Association for Symbolic Logic.

Computability theory (aka recursion theory)

Computability theory, also called recursion theory, is a branch of mathematical logic, of computer science, and of the theory of computation that originated in the 1930s with the study of computable functions and Turing degrees. The field has since grown to include the study of generalized computability and definability. In these areas, recursion theory overlaps with proof theory and effective descriptive set theory.

The basic questions addressed by recursion theory are “What does it mean for a function on the natural numbers to be computable?” and “How can noncomputable functions be classified into a hierarchy based on their level of noncomputability?”. The answers to these questions have led to a rich theory that is still being actively researched.

Recursion theorists in mathematical logic often study the theory of relative computability, reducibility notions and degree structures described in this article. This contrasts with the theory of subrecursive hierarchies, formal methods and formal languages that is common in the study of computability theory in computer science. There is considerable overlap in knowledge and methods between these two research communities, however, and no firm line can be drawn between them.

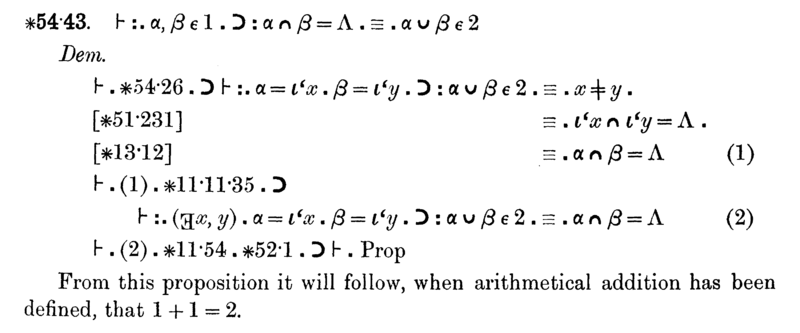

Proof theory

Proof theory is a major branch[1] of mathematical logic that represents proofs as formal mathematical objects, facilitating their analysis by mathematical techniques. Proofs are typically presented as inductively-defined data structures such as plain lists, boxed lists, or trees, which are constructed according to the axioms and rules of inference of the logical system. As such, proof theory is syntactic in nature, in contrast to model theory, which is semantic in nature.

Some of the major areas of proof theory include structural proof theory, ordinal analysis, provability logic, reverse mathematics, proof mining, automated theorem proving, and proof complexity. Much research also focuses on applications in computer science, linguistics, and philosophy.

Type theory

In mathematics, logic, and computer science, a type theory is any of a class of formal systems, some of which can serve as alternatives to set theory as a foundation for all mathematics. In type theory, every “term” has a “type” and operations are restricted to terms of a certain type.

Type theory is closely related to (and in some cases overlaps with) type systems, which are a programming language feature used to reduce bugs. Type theory was created to avoid paradoxes in a variety of formal logics and rewrite systems.

Two well-known type theories that can serve as mathematical foundations are Alonzo Church's typed λ-calculus and Per Martin-Löf's intuitionistic type theory.

Sequent calculus

Sequent calculus is, in essence, a style of formal logical argumentation where every line of a proof is a conditional tautology (called a sequent by Gerhard Gentzen) instead of an unconditional tautology. Each conditional tautology is inferred from other conditional tautologies on earlier lines in a formal argument according to rules and procedures of inference, giving a better approximation to the style of natural deduction used by mathematicians than David Hilbert's earlier style of formal logic where every line was an unconditional tautology. There may be more subtle distinctions to be made; for example, there may be non-logical axioms upon which all propositions are implicitly dependent. Then sequents signify conditional theorems in a first-order language rather than conditional tautologies.

Sequent calculus is one of several extant styles of proof calculus for expressing line-by-line logical arguments.

- Hilbert style. Every line is an unconditional tautology (or theorem).

- Gentzen style. Every line is a conditional tautology (or theorem) with zero or more conditions on the left.

- Natural deduction. Every (conditional) line has exactly one asserted proposition on the right.

- Sequent calculus. Every (conditional) line has zero or more asserted propositions on the right.

In other words, natural deduction and sequent calculus systems are particular distinct kinds of Gentzen-style systems. Hilbert-style systems typically have a very small number of inference rules, relying more on sets of axioms. Gentzen-style systems typically have very few axioms, if any, relying more on sets of rules.

Gentzen-style systems have significant practical and theoretical advantages compared to Hilbert-style systems. For example, both natural deduction and sequent calculus systems facilitate the elimination and introduction of universal and existential quantifiers so that unquantified logical expressions can be manipulated according to the much simpler rules of propositional calculus. In a typical argument, quantifiers are eliminated, then propositional calculus is applied to unquantified expressions (which typically contain free variables), and then the quantifiers are reintroduced. This very much parallels the way in which mathematical proofs are carried out in practice by mathematicians. Predicate calculus proofs are generally much easier to discover with this approach, and are often shorter. Natural deduction systems are more suited to practical theorem-proving. Sequent calculus systems are more suited to theoretical analysis.
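To make the idea of a sequent concrete, here is a minimal sketch of backward proof search in a sequent calculus for classical propositional logic (no quantifiers). It is an illustration under stated assumptions rather than any particular published system: the tuple encoding of formulas, the function name `provable`, and the specific left and right rules (a common textbook formulation in which every rule is invertible) are choices made here. A sequent is represented by two lists, the conditions on the left and the asserted propositions on the right.

```python
# Formula encoding (an assumption of this sketch):
#   an atom is a string, e.g. "p";
#   compound formulas are tuples: ("not", A), ("and", A, B), ("or", A, B), ("imp", A, B).

def provable(left, right):
    """Decide whether the sequent  left |- right  is derivable."""
    # Axiom: the sequent is closed if some atom occurs on both sides.
    atoms_left = {f for f in left if isinstance(f, str)}
    if any(isinstance(f, str) and f in atoms_left for f in right):
        return True

    # Left rules: decompose the first compound formula among the conditions.
    for i, f in enumerate(left):
        if isinstance(f, str):
            continue
        rest, op = left[:i] + left[i + 1:], f[0]
        if op == "not":
            return provable(rest, right + [f[1]])
        if op == "and":
            return provable(rest + [f[1], f[2]], right)
        if op == "or":
            return provable(rest + [f[1]], right) and provable(rest + [f[2]], right)
        if op == "imp":
            return provable(rest, right + [f[1]]) and provable(rest + [f[2]], right)
        raise ValueError(f"unknown connective: {op}")

    # Right rules: decompose the first compound formula among the assertions.
    for i, f in enumerate(right):
        if isinstance(f, str):
            continue
        rest, op = right[:i] + right[i + 1:], f[0]
        if op == "not":
            return provable(left + [f[1]], rest)
        if op == "and":
            return provable(left, rest + [f[1]]) and provable(left, rest + [f[2]])
        if op == "or":
            return provable(left, rest + [f[1], f[2]])
        if op == "imp":
            return provable(left + [f[1]], rest + [f[2]])
        raise ValueError(f"unknown connective: {op}")

    # Only atoms remain and none is shared, so the sequent is not derivable.
    return False


peirce = ("imp", ("imp", ("imp", "p", "q"), "p"), "p")
print(provable([], [peirce]))                        # Peirce's law
print(provable([], [("or", "p", ("not", "p"))]))     # excluded middle
print(provable([("imp", "p", "q")], [("imp", "q", "p")]))
```

Each recursive call removes one connective, so the search terminates. Allowing more than one formula on the right is what lets this sketch derive classical principles such as Peirce's law.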

Calculus of constructions

In mathematical logic and computer science, the calculus of constructions (CoC) is a type theory created by Thierry Coquand. It can serve as both a typed programming language and as a constructive foundation for mathematics. For this second reason, the CoC and its variants have been the basis for Coq and other proof assistants.

Some of its variants include the calculus of inductive constructions (which adds inductive types), the calculus of (co)inductive constructions (which adds coinduction), and the predicative calculus of inductive constructions (which removes some impredicativity).

The CoC is a higher-order typed lambda calculus. It is well known for being at the top of Barendregt's lambda cube. It is possible within CoC to define functions from, say, integers to types and from types to types, as well as functions from integers to integers.

The Calculus of Constructions can be considered an extension of the Curry–Howard isomorphism. The Curry–Howard isomorphism associates a term in the simply typed lambda calculus with each natural-deduction proof in intuitionistic propositional logic. The Calculus of Constructions extends this isomorphism to proofs in the full intuitionistic predicate calculus, which includes proofs of quantified statements (which we will also call “propositions”).

Dependent type

In computer science and logic, a dependent type is a type whose definition depends on a value. A “pair of integers” is a type. A “pair of integers where the second is greater than the first” is a dependent type because of the dependence on the value. It is an overlapping feature of type theory and type systems. In intuitionistic type theory, dependent types are used to encode logic's quantifiers like “for all” and “there exists”. In functional programming languages like Agda, ATS, Coq, Epigram, Idris, and Shen, dependent types prevent bugs by allowing extremely expressive types.

Two common examples of dependent types are dependent functions and dependent pairs. A dependent function's return type may depend on the value (not just type) of an argument. A function that takes a positive integer “n” may return an array of length “n”. (Note that this is different from polymorphism and generic programming, both of which include the type as an argument.) A dependent pair may have a second value that depends on the first. It can be used to encode a pair of integers where the second one is greater than the first.
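The two examples above can be sketched in Lean 4 syntax. This is an illustrative sketch only: the names `Vec`, `zeros`, and `IncreasingPair` are hypothetical, natural numbers stand in for integers, and a hand-rolled length-indexed list is used instead of any library type.

```lean
-- A list whose length is part of its type.
inductive Vec (α : Type) : Nat → Type where
  | nil : Vec α 0
  | cons {n : Nat} : α → Vec α n → Vec α (n + 1)

-- Dependent function: the return type `Vec Nat n` depends on the value `n`.
def zeros : (n : Nat) → Vec Nat n
  | 0 => Vec.nil
  | n + 1 => Vec.cons 0 (zeros n)

-- Dependent pair: the type of the second component mentions the first component `a`.
abbrev IncreasingPair : Type := (a : Nat) × { b : Nat // a < b }

-- A pair where the second number is greater than the first, with the proof attached.
example : IncreasingPair := ⟨1, ⟨2, by decide⟩⟩
```

A pair such as (2, 1) cannot be given this type, because no proof of 2 < 1 exists to complete it.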

Dependent types add complexity to a type system. Deciding the equality of dependent types in a program may require computations. If arbitrary values are allowed in dependent types, then deciding type equality may involve deciding whether two arbitrary programs produce the same result; hence type checking may become undecidable.

Dependent types were created to deepen the connection between programming and logic.

In 1934, Haskell Curry noticed that the types used in typed lambda calculus, and in its combinatory logic counterpart, followed the same pattern as axioms in propositional logic. Going further, for every proof in the logic, there was a matching function (term) in the programming language. One of Curry's examples was the correspondence between simply typed lambda calculus and intuitionistic logic.[1]

Predicate logic is an extension of propositional logic, adding quantifiers. Howard and de Bruijn extended lambda calculus to match this more powerful logic by creating types for dependent functions, which correspond to "for all", and dependent pairs, which correspond to "there exists".[2]

(Because of this and other work by Howard, propositions-as-types is known as the Curry–Howard correspondence.)

Kleene–Rosser paradox

In mathematics, the Kleene–Rosser paradox is a paradox that shows that certain systems of formal logic are inconsistent, in particular the version of Curry's combinatory logic introduced in 1930, and Church's original lambda calculus, introduced in 1932–1933, both originally intended as systems of formal logic. The paradox was exhibited by Stephen Kleene and J. B. Rosser in 1935.

The paradox

Kleene and Rosser were able to show that both systems are able to characterize and enumerate their provably total, definable number-theoretic functions, which enabled them to construct a term that essentially replicates the Richard paradox in formal language.

Curry later managed to identify the crucial ingredients of the calculi that allowed the construction of this paradox, and used this to construct a much simpler paradox, now known as Curry's paradox.

Richard paradox

In logic, Richard's paradox is a semantical antinomy of set theory and natural language first described by the French mathematician Jules Richard in 1905. The paradox is ordinarily used to motivate the importance of distinguishing carefully between mathematics and metamathematics. Kurt Gödel specifically cites Richard's antinomy as a semantical analogue to his syntactical incompleteness result in the introductory section of “On Formally Undecidable Propositions in Principia Mathematica and Related Systems I”. The paradox was also a motivation for the development of predicative mathematics.

The original statement of the paradox, due to Richard (1905), is strongly related to Cantor's diagonal argument on the uncountability of the set of real numbers.

The paradox begins with the observation that certain expressions of natural language define real numbers unambiguously, while other expressions of natural language do not. For example, “The real number the integer part of which is 17 and the nth decimal place of which is 0 if n is even and 1 if n is odd” defines the real number 17.1010101… = 1693/99, while the phrase “the capital of England” does not define a real number.

Thus there is an infinite list of English phrases (such that each phrase is of finite length, but lengths vary in the list) that define real numbers unambiguously. We first arrange this list of phrases by increasing length, then order all phrases of equal length lexicographically (in dictionary order), so that the ordering is canonical. This yields an infinite list of the corresponding real numbers: r1, r2, ... . Now define a new real number r as follows. The integer part of r is 0, the nth decimal place of r is 1 if the nth decimal place of rn is not 1, and the nth decimal place of r is 2 if the nth decimal place of rn is 1.

The preceding two paragraphs are an expression in English that unambiguously defines a real number r. Thus r must be one of the numbers rn. However, r was constructed so that it cannot equal any of the rn. This is the paradoxical contradiction.
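The diagonal rule used to build r can be tried out on a finite prefix of the list. The sketch below is only an illustration of the construction, not of the paradox itself: the function name `diagonal_digits` and the four sample digit rows are assumptions, and each row holds just the first few decimal places of some r1, r2, ... .

```python
def diagonal_digits(rows):
    """Apply the rule from the text: digit n of r is 1 unless digit n of r_n is 1, then it is 2."""
    return [2 if rows[n][n] == 1 else 1 for n in range(len(rows))]


# Hypothetical first four decimal places of r1..r4 (integer parts are ignored).
rows = [
    [1, 0, 1, 0],  # e.g. 17.1010...
    [3, 3, 3, 3],  # e.g. 0.3333...
    [5, 0, 0, 0],  # e.g. 0.5000...
    [1, 4, 1, 5],  # e.g. 3.1415...
]

r = diagonal_digits(rows)
print(r)

# r differs from every r_n in the nth decimal place, so it equals none of them.
differs_everywhere = all(r[n] != rows[n][n] for n in range(len(rows)))
print(differs_everywhere)
```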

Richard's paradox results in an untenable contradiction, which must be analyzed to find an error.

The proposed definition of the new real number r clearly includes a finite sequence of characters, and hence it seems at first to be a definition of a real number. However, the definition refers to definability-in-English itself. If it were possible to determine which English expressions actually do define a real number, and which do not, then the paradox would go through. Thus the resolution of Richard's paradox is that there is not any way to unambiguously determine exactly which English sentences are definitions of real numbers (see Good 1966). That is, there is not any way to describe in a finite number of words how to tell whether an arbitrary English expression is a definition of a real number. This is not surprising, as the ability to make this determination would also imply the ability to solve the halting problem and perform any other non-algorithmic calculation that can be described in English.

Combinatory logic

Combinatory logic is a notation to eliminate the need for quantified variables in mathematical logic. It was introduced by Moses Schönfinkel[1] and Haskell Curry,[2] and has more recently been used in computer science as a theoretical model of computation and also as a basis for the design of functional programming languages. It is based on combinators. A combinator is a higher-order function that uses only function application and earlier defined combinators to define a result from its arguments.
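As a rough illustration, the classic combinators S, K, and I can be written as curried Python functions. The text above does not name specific combinators, so the choice of S and K as the starting basis is an assumption of this sketch; the point is that I and function composition are then built purely by applying earlier combinators to each other.

```python
# Basic combinators, written as curried one-argument functions.
K = lambda x: lambda y: x                      # K x y = x
S = lambda f: lambda g: lambda x: f(x)(g(x))   # S f g x = f x (g x)

# Combinators defined only by applying earlier combinators.
I = S(K)(K)        # I x = K x (K x) = x
B = S(K(S))(K)     # B f g x = f (g x), i.e. function composition

# Ordinary functions used only to observe the behaviour.
increment = lambda n: n + 1
double = lambda n: n * 2

print(I(42))
print(B(increment)(double)(5))
```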

Ramsey theory

Ramsey theory, named after the British mathematician and philosopher Frank P. Ramsey, is a branch of mathematics that studies the conditions under which order must appear. Problems in Ramsey theory typically ask a question of the form: “how many elements of some structure must there be to guarantee that a particular property will hold?”

Examples

A typical result in Ramsey theory starts with some mathematical structure that is then cut into pieces. How big must the original structure be in order to ensure that at least one of the pieces has a given interesting property? This idea can be defined as partition regularity.

For example, consider a complete graph of order n; that is, there are n vertices and each vertex is connected to every other vertex by an edge. A complete graph of order 3 is called a triangle. Now colour every edge red or blue. How large must n be in order to ensure that there is either a blue triangle or a red triangle? It turns out that the answer is 6. See the article on Ramsey's theorem for a rigorous proof.

Another way to express this result is as follows: at any party with at least six people, there are three people who are all either mutual acquaintances (each one knows the other two) or mutual strangers (each one does not know either of the other two). See theorem on friends and strangers.

This is also a special case of Ramsey's theorem, which says that for any given integer c and any given integers n1, ..., nc, there is a number, R(n1, ..., nc), such that if the edges of a complete graph of order R(n1, ..., nc) are coloured with c different colours, then for some i between 1 and c, it must contain a complete subgraph of order ni whose edges are all colour i. The special case above has c = 2 and n1 = n2 = 3.
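The special case c = 2, n1 = n2 = 3 is small enough to confirm by exhaustive search. The sketch below, with hypothetical function names, tries every red/blue colouring of the complete graph of order n and looks for a single-coloured triangle. It is a brute-force check, not a proof technique that scales to larger Ramsey numbers.

```python
from itertools import combinations, product


def has_monochromatic_triangle(n, colouring):
    """True if some triangle has all three edges in the same colour."""
    return any(
        colouring[(a, b)] == colouring[(a, c)] == colouring[(b, c)]
        for a, b, c in combinations(range(n), 3)
    )


def every_colouring_has_monochromatic_triangle(n):
    """Try all 2-colourings of the edges of the complete graph of order n."""
    edges = list(combinations(range(n), 2))
    for colours in product((0, 1), repeat=len(edges)):
        colouring = dict(zip(edges, colours))
        if not has_monochromatic_triangle(n, colouring):
            return False  # found a colouring with no single-coloured triangle
    return True


print(every_colouring_has_monochromatic_triangle(5))
print(every_colouring_has_monochromatic_triangle(6))
```

For n = 6 there are 2^15 = 32768 colourings to check, which runs quickly. The count grows as 2^(n(n-1)/2), so this approach becomes infeasible almost immediately for larger cases.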

Binary relation

reflexive

A binary relation ▲ is reflexive if x ▲ x for all x.

transitive

A binary relation ▲ is transitive if, for all x, y, and z, whenever x ▲ y and y ▲ z, then x ▲ z.

preorder

A binary relation is a preorder if it is reflexive and transitive.
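On a finite set these definitions can be checked directly. In the sketch below a relation is represented as a set of pairs, so that x ▲ y corresponds to `(x, y) in relation`. The function names and the two example relations (divisibility and strict less-than on 1..6) are assumptions made for illustration.

```python
def is_reflexive(relation, domain):
    """x ▲ x for all x in the domain."""
    return all((x, x) in relation for x in domain)


def is_transitive(relation):
    """Whenever x ▲ y and y ▲ z, also x ▲ z."""
    return all(
        (x, z) in relation
        for (x, y) in relation
        for (y2, z) in relation
        if y == y2
    )


def is_preorder(relation, domain):
    return is_reflexive(relation, domain) and is_transitive(relation)


domain = range(1, 7)
divides = {(x, y) for x in domain for y in domain if y % x == 0}
less_than = {(x, y) for x in domain for y in domain if x < y}

print(is_preorder(divides, domain))     # reflexive and transitive
print(is_reflexive(less_than, domain))  # fails: no x satisfies x < x
print(is_transitive(less_than))
```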