One Language to Rule Them All? Which Language to Use for Find Replace (2011)

This is my personal account of a struggle in choosing languages, and of trying to keep the efficiency of using one single system instead of a mishmash of components.

Or, What Language to Use for Find Replace?

I never really got into bash shell scripting. I tried sometimes, but the ratio of power to syntax is intolerable. Knowing perl well pretty much killed any incentive left.

In the late 1990s, my thought was that i'd just learn perl well and never need to learn another lang or shell for any text processing and sys admin tasks. The thinking was that it'd be efficient, in the sense of not wasting time learning multiple langs to do the same thing (not counting job requirements in a company). So i wrote a lot of perl scripts for find replace and file management, and tried to make them as general as possible. But what turned out is that, over the years, for one reason or another, i learned python, php, then, in 2007, elisp. Maybe the love for languages inevitably won over my one-language efficiency obsession. But also, i ended up rewriting many of my text processing scripts in each lang. I guess part of it was exercise when learning a new lang.

But one thing i learned is that for misc text processing scripts, the idea of writing one generic, flexible, powerful script once and for all just doesn't work, because the coverage is too wide and the tasks that need to be done at any one time are too specific. (And i think this makes sense, because the idea of one language or one generic script for everything stems from ideology, not from real world practice. If we look at the real world, it's almost always a disparate mess of components and systems.)

My text processing scripts ended up being a mess. There are several versions in different langs. A few are general, but most were used once or only in a particular year. (Many are branched off from a generic one and customized for a specific need, used, then thrown away.) When i need to do some particular task, i find it easier just to write a new one in whatever lang is currently in my brain than to spend time fishing out and revisiting old generic scripts.

Concrete Examples

I wrote this general script in 2000, intended to be one-stop for all find replace needs. See: Perl: Find Replace Text in Directory 📜.

The thinking was that whenever i needed to do some find and replace, i'd just use this script. If i needed some feature that wasn't there, such as replacing only if some condition is met, multi-pair replace, or finding and reporting the number of occurrences, i'd modify the script so that the specific need became a parameter in a general scheme.

In 2005, while i was learning python, i wrote several versions in python, e.g. Python: Find Replace Regex in Dir.

I did that to learn python. The main reason i started to learn python was that perl at the time did not have robust Unicode support, and i started needing to deal with lots of Unicode. (It was also around the same time that i switched from XEmacs to Emacs, for the same reason. 〔see My Experience of Emacs vs XEmacs〕)

The python scripts are not ports of my perl code. Each python script does one specific find and replace variation; none is a single generalized script. As it turns out, i have stopped using my generic perl script for find and replace. I didn't need all those features, and when i do need them, i have several other scripts that each address a particular need.

What Are the Different Tasks of Find Replace?

Here are some examples of why there are so many variations:

- One for searching utf-8 encoded files.

- One for changing Windows/unix line endings.

- One for converting file encoding.

- One for multiple pairs of find replace in one shot.

- One for regex, one for literal text. (It's a pain to always use regex when you just need plain text: if you forget to turn regex off, you get screwed. Also, regex escaping is a pain.)

- One for find and report only (as in grep), one for find+replace. (It's a pain to use a generalized find replace script when all you want is a report.)

- One for doing find replace only if a particular condition is met. (For example, if the search string is inside a particular HTML tag.)
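To make a few of these variants concrete, here is a rough sketch of them as small, separate shell functions. It assumes GNU/Linux tools (grep, tr, sed, iconv); the function names are hypothetical, and the Latin-1 source encoding in `to_utf8` is an assumption chosen for illustration. Each function stays short precisely because it does only one thing.

```shell
#!/usr/bin/env bash
# Hypothetical helpers, one per find/replace task.

# Report only: for each file under DIR, count lines containing a literal
# (non-regex) string; files with zero matches are filtered out.
report_literal() {
  local needle="$1" dir="$2"
  grep -r -F -c -- "$needle" "$dir" | grep -v ':0$'
}

# Line endings: Windows CRLF to unix LF (deletes every CR byte in the file).
crlf_to_lf() {
  tr -d '\r' < "$1"
}

# Multiple pairs in one shot: multi_replace FILE FIND1 REPL1 FIND2 REPL2 ...
# Pairs are sed regexes applied in order, so a later pair sees the output
# of an earlier one; characters such as . * / [ must be escaped by the caller.
multi_replace() {
  local file="$1"; shift
  local args=()
  while [ "$#" -ge 2 ]; do
    args+=(-e "s/$1/$2/g")
    shift 2
  done
  sed "${args[@]}" "$file"
}

# Encoding: convert a file assumed to be Latin-1 into UTF-8.
to_utf8() {
  iconv -f ISO-8859-1 -t UTF-8 "$1"
}
```

All four write to stdout rather than editing in place, so a result can be inspected before it overwrites anything.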

The Emacs Blackhole

Then in 2006, i fell into the emacs lisp hole. In the process, i realized that elisp for text processing is more powerful than perl or python. Not due to lisp the lang, but due to the whole emacs system:

- Emacs Lisp is specifically designed for text processing. It has a buffer datatype, which is much more powerful than just regex on a string. (Think of it as a generalized string datatype.) See: Emacs Lisp vs Perl for Text Processing (2007).

- Emacs Lisp is interactive. Every text processing script you write can have an interactive user interface without much extra code. (For example, find replace on a case-by-case basis, with visual display of results in real time, one click to jump to the location in a file, and syntax coloring of results.)

- Emacs the system saves you lots of tedious details, such as file encoding, backup, and permission preservation. Your script doesn't have to deal with any of these. (In perl or python, you have to, and you have to load lots of libraries for system, file path, etc.)

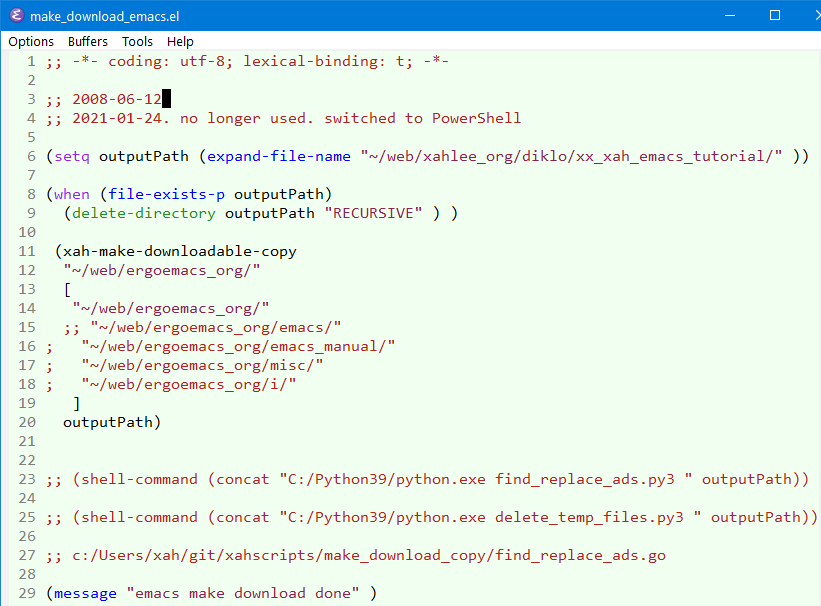

So, almost all my new text processing scripts are in elisp now. I still use a few of my Perl and Python scripts. Here are some examples of the elisp ones:

- Emacs Lisp Multi-Pair Find Replace Applications

- Elisp: Write grep

- Elisp: Text Processing: Generate a Web Links Report

- Creating a Sitemap with Emacs Lisp

Back to Shell Bag?

Sometime in 2008, i grew a shell script that processes my web logs using the usual unix bag of tools: cat, grep, awk, sort, uniq. It's about 100 lines.

```bash
# 2008-04-16 , 2010-08-09
# Xah Lee ∑ http://xahlee.org/

inputFile="xx";

# filter out bad accesses
cat "$inputFile" | grep '" 200 ' | grep '] "GET ' | grep -v -E "scoutjet\.com|yahoo.com/help/us/ysearch/slurp|google\.com/bot|msn.com/msnbot\.htm|dotnetdotcom\.org|yandex\.com/bots" > x-log_200_GET.txt

cat "$inputFile" | cut -d '"' -f 6 | sort | uniq -c | sort -n -r > x-agents.txt

cat x-agents.txt | grep -i -E "bot|crawler|spider|http" > x-bots.txt

#----------
# get stumble referrals
cat x-log_200_GET.txt | grep '\.html HTTP' | awk '$12 ~ /stumbleupon\.com/ {print $12 , $7}' | sort | sed 's/refer.php/url.php/' | uniq -c | sort -n -r > x-referral_stumble.sh

# get youporn referrals
cat x-log_200_GET.txt | grep '\.html HTTP' | awk '$12 ~ /youporn\.com/ {print $12 , $7}' | sort | uniq -c | sort -n -r > x-referral_youporn.sh

# get Wikipedia referrals
cat x-log_200_GET.txt | awk '$12 ~ /wikipedia.org/ {print $12}' | sort | uniq -c | sort -n -r > x-referral_Wikipedia.sh

# get blog sites referrals
cat x-log_200_GET.txt | grep '\.html HTTP' | awk '$12 ~ /delicious\.com|livejournal\.com|multiply\.com|blogspot\.com|reddit\.com|twitter\.com|swik\.net|google\.com\/reader\/view/ {print $12 , $7}' | sort | sed 's/refer.php/url.php/' | uniq -c | sort -n -r > x-referral_blog.sh

# get all referrals from non-search sites
cat x-log_200_GET.txt | grep '\.html HTTP' | awk '$12 !~ /xahlee.org|-|\?|stumbleupon\.com|youporn\.com|wikipedia.org|delicious\.com|livejournal\.com|multiply\.com|blogspot\.com|reddit\.com|twitter\.com|swik\.net|http:\/\/www\.google/ {print $12 , $7}' | sort | uniq -c | sort -n -r > x-referral_nonsearch.sh

# get all referrals from search sites
cat x-log_200_GET.txt | grep '\.html HTTP' | awk '$12 ~ /\?/ {print $12 , $7}' | grep -v 'stumbleupon\.com' | sort | uniq -c | sort -n -r | head -n 500 > x-referral_search.sh

#----------
# most popular page
cat x-log_200_GET.txt | grep '\.html HTTP' | awk '{ print $7 }' | sort | uniq -c | sort -n -r > x-pop-site_all.sh

# popularity by directory
cat x-pop-site_all.sh | grep "/MathGraphicsGallery_dir/" > x-pop-site_math_gallery.sh
cat x-pop-site_all.sh | grep "/Periodic_dosage_dir/bangu/" > x-pop-site_bangu.sh
cat x-pop-site_all.sh | grep "/Periodic_dosage_dir/lacru/" > x-pop-site_lacru.sh
cat x-pop-site_all.sh | grep "/Periodic_dosage_dir/lanci/" > x-pop-site_lanci.sh
cat x-pop-site_all.sh | grep "/Periodic_dosage_dir/las_vegas/" > x-pop-site_vegas.sh
cat x-pop-site_all.sh | grep "/Periodic_dosage_dir/sanga_pemci/" > x-pop-site_sanga_pemci.sh
cat x-pop-site_all.sh | grep "/Periodic_dosage_dir/t1/" > x-pop-site_t1.sh
cat x-pop-site_all.sh | grep "/Periodic_dosage_dir/t2/" > x-pop-site_t2.sh
cat x-pop-site_all.sh | grep "/SpecialPlaneCurves_dir/" > x-pop-site_curve.sh
cat x-pop-site_all.sh | grep "/UnixResource_dir/" > x-pop-site_UnixResource.sh
cat x-pop-site_all.sh | grep "/Vocabulary_dir/" > x-pop-site_vocab.sh
cat x-pop-site_all.sh | grep "/Wallpaper_dir/" > x-pop-site_wallpaper-group.sh
cat x-pop-site_all.sh | grep "/3d/" > x-pop-site_3d.sh
cat x-pop-site_all.sh | grep "/dinju/" > x-pop-site_dinju.sh
cat x-pop-site_all.sh | grep "/emacs/" > x-pop-site_emacs.sh
cat x-pop-site_all.sh | grep "/elisp/" > x-pop-site_elisp.sh
cat x-pop-site_all.sh | grep "/java-a-day/" > x-pop-site_java.sh
cat x-pop-site_all.sh | grep "/js/" > x-pop-site_javascript.sh
cat x-pop-site_all.sh | grep "/p/" > x-pop-site_literature.sh
cat x-pop-site_all.sh | grep "/python/" > x-pop-site_perl-python.sh
cat x-pop-site_all.sh | grep "/sl/" > x-pop-site_sl.sh
cat x-pop-site_all.sh | grep "/surface/" > x-pop-site_surface.sh
cat x-pop-site_all.sh | grep "/vofli_bolci/" > x-pop-site_juggling.sh
cat x-pop-site_all.sh | grep "/xamsi_calku/" > x-pop-site_xamsi_calku.sh

# echo "" > x-pop-site_compare.sh
# echo "$(awk '{sum += $1} END {print sum}' x-pop-site_UnixResource.sh) UnixResource" >> x-pop-site_compare.sh
# echo "$(awk '{sum += $1} END {print sum}' x-pop-site_3d.sh) 3d" >> x-pop-site_compare.sh
# echo "$(awk '{sum += $1} END {print sum}' x-pop-site_curve.sh) curve" >> x-pop-site_compare.sh
# echo "$(awk '{sum += $1} END {print sum}' x-pop-site_bangu.sh) bangu" >> x-pop-site_compare.sh
# echo "$(awk '{sum += $1} END {print sum}' x-pop-site_dinju.sh) dinju" >> x-pop-site_compare.sh
# echo "$(awk '{sum += $1} END {print sum}' x-pop-site_emacs.sh) emacs" >> x-pop-site_compare.sh
# echo "$(awk '{sum += $1} END {print sum}' x-pop-site_elisp.sh) elisp" >> x-pop-site_compare.sh
# echo "$(awk '{sum += $1} END {print sum}' x-pop-site_java.sh) java" >> x-pop-site_compare.sh
# echo "$(awk '{sum += $1} END {print sum}' x-pop-site_javascript.sh) js" >> x-pop-site_compare.sh
# echo "$(awk '{sum += $1} END {print sum}' x-pop-site_juggling.sh) juggling" >> x-pop-site_compare.sh
# echo "$(awk '{sum += $1} END {print sum}' x-pop-site_lacru.sh) lacru" >> x-pop-site_compare.sh
# echo "$(awk '{sum += $1} END {print sum}' x-pop-site_lanci.sh) lanci" >> x-pop-site_compare.sh
# echo "$(awk '{sum += $1} END {print sum}' x-pop-site_literature.sh) literature" >> x-pop-site_compare.sh
# echo "$(awk '{sum += $1} END {print sum}' x-pop-site_math_gallery.sh) math_gallery" >> x-pop-site_compare.sh
# echo "$(awk '{sum += $1} END {print sum}' x-pop-site_perl-python.sh) perl-python" >> x-pop-site_compare.sh
# echo "$(awk '{sum += $1} END {print sum}' x-pop-site_sanga_pemci.sh) sanga_pemci" >> x-pop-site_compare.sh
# echo "$(awk '{sum += $1} END {print sum}' x-pop-site_sl.sh) sl" >> x-pop-site_compare.sh
# echo "$(awk '{sum += $1} END {print sum}' x-pop-site_surface.sh) surface" >> x-pop-site_compare.sh
# echo "$(awk '{sum += $1} END {print sum}' x-pop-site_t1.sh) dosage_t1" >> x-pop-site_compare.sh
# echo "$(awk '{sum += $1} END {print sum}' x-pop-site_t2.sh) dosage_t2" >> x-pop-site_compare.sh
# echo "$(awk '{sum += $1} END {print sum}' x-pop-site_vegas.sh) las vegas" >> x-pop-site_compare.sh
# echo "$(awk '{sum += $1} END {print sum}' x-pop-site_vocab.sh) vocab" >> x-pop-site_compare.sh
# echo "$(awk '{sum += $1} END {print sum}' x-pop-site_wallpaper-group.sh) wallpaper-group" >> x-pop-site_compare.sh
# echo "$(awk '{sum += $1} END {print sum}' x-pop-site_xamsi_calku.sh) xamsi_calku" >> x-pop-site_compare.sh
# sort -n -r -o x-pop-site_compare.sh x-pop-site_compare.sh

#----------
# count JavaView access
cat x-log_200_GET.txt | grep -E '_jv_.+ HTTP' | awk '{print $7}' | sort | uniq -c | sort -n -r > x-pop-type_jv.sh

# count Geogebra access
cat x-log_200_GET.txt | grep '\.ggb HTTP' | awk '{print $7}' | sort | uniq -c | sort -n -r > x-pop-type_ggb.sh

# count elisp files access
cat x-log_200_GET.txt | grep '\.el HTTP' | awk '{print $7}' | sort | uniq -c | sort -n -r > x-pop-type_el.sh

# count fig files access
cat x-log_200_GET.txt | grep '\.fig HTTP' | awk '{print $7}' | sort | uniq -c | sort -n -r > x-pop-type_fig.sh

# count gsp files access
cat x-log_200_GET.txt | grep '\.gsp HTTP' | awk '{print $7}' | sort | uniq -c | sort -n -r > x-pop-type_gsp.sh

#----------
# all kml downloads
cat x-log_200_GET.txt | awk '$0 ~ /\.kml HTTP/ {print $7}' | sort | uniq -c | sort -n -r > x-pop-type_kml.sh

# all jar downloads
cat x-log_200_GET.txt | awk '$0 ~ /\.jar HTTP/ {print $7}' | sort | uniq -c | sort -n -r > x-pop-type_jar.sh

# all gz downloads
cat x-log_200_GET.txt | awk '$0 ~ /\.gz HTTP/ {print $7}' | sort | uniq -c | sort -n -r > x-pop-type_gz_all.sh

#----------
# all zip downloads
cat x-log_200_GET.txt | awk '$0 ~ /\.zip HTTP/ {print $7}' | sort | uniq -c | sort -n -r > x-pop-type_zip_all.sh

#----------
# leechers
cat x-log_200_GET.txt | grep -v -i '\.html HTTP' | awk '$12 !~ /xahlee\.org|xahporn\.org|xahlee\.info|xahlee\.blogspot\.com|-/ {print $12, $7}' | sort | uniq -c | sort -n -r > x-leechers.sh
```
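The "popularity by directory" part of the script above, together with its commented-out summary, repeats one pattern per directory. As a rough sketch only, the same work could be driven by a table and a loop. The `sections` table, the helper name `summarize_sections`, and the use of each label as the output file suffix are all hypothetical simplifications (the original pairs some files with different labels, e.g. `x-pop-site_t1.sh` with `dosage_t1`), and only three of the directories are listed.

```shell
#!/usr/bin/env bash
# Hypothetical table of "directory-to-match label" pairs (only a few shown).
sections=(
  "/emacs/ emacs"
  "/elisp/ elisp"
  "/python/ perl-python"
)

# summarize_sections ALL_FILE (hypothetical name)
# ALL_FILE holds "count path" lines, as made by `sort | uniq -c | sort -n -r`.
# Writes one x-pop-site_LABEL.sh per section plus a ranked summary in
# x-pop-site_compare.sh, all in the current directory.
summarize_sections() {
  local all="$1" entry dir label total
  : > x-pop-site_compare.sh                 # start an empty summary
  for entry in "${sections[@]}"; do
    read -r dir label <<< "$entry"          # split "dir label" on whitespace
    # -F: match the directory as a fixed string, not a regex
    grep -F -- "$dir" "$all" > "x-pop-site_$label.sh"
    # sum the first column (the uniq -c counts); "+ 0" prints 0 if empty
    total=$(awk '{sum += $1} END {print sum + 0}' "x-pop-site_$label.sh")
    echo "$total $label" >> x-pop-site_compare.sh
  done
  # sort -o may name its own input file, so this sorts in place safely
  sort -n -r -o x-pop-site_compare.sh x-pop-site_compare.sh
}
```

Usage would be `summarize_sections x-pop-site_all.sh` after the "most popular page" line has run. Adding a directory then means adding one line to the table.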

At one time i wondered, why do i have this 100-line shell script? Isn't it my idea that perl should supplant all shell scripts? And what happened to my elisp love?

I gave it a little thought, and i think the conclusion is that for this task, the shell script is actually more efficient and simpler to write. Possibly, if i had started with perl for this task, i might have ended up with well-structured code that is not necessarily less efficient… but things in life aren't all planned. It began when i just needed a few lines of grep to see something in my web log. Then, over the years, i added another line, then another, all need-based. If at any of those times i had thought “let's scratch this and restart with perl”, that would have been a waste of time.

Besides that, i doubt that perl would do a better job for this. With shell tools, each line just does one simple thing with piping. To do it in perl, one would have to read in the huge log file, then maintain some data structure and try to parse it… too much memory and thinking would be involved. If i wrote the perl by emulating the shell code line by line, there would be no point in switching to perl, since it's the exact same code in different clothing.
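The one simple thing most lines of the script do is the same four-stage pipeline: pull out a field, group equal values, count them, rank by count. Here is a minimal sketch of that idiom as a function. `count_field` is a hypothetical name, and it assumes whitespace-separated fields as in the script, where field 7 is the requested path.

```shell
#!/usr/bin/env bash
# count_field N FILE (hypothetical name): rank the values of
# whitespace-separated field N by frequency, most frequent first.
count_field() {
  local n="$1" file="$2"
  awk -v n="$n" '{ print $n }' "$file" |  # 1. keep only field N
    sort |                                # 2. bring equal values together
    uniq -c |                             # 3. collapse runs to "count value"
    sort -n -r                            # 4. highest count first
}
```

For example, `count_field 7 x-log_200_GET.txt` gives roughly what the "most popular page" line produces, minus its `.html` filter. Each stage reads a stream, so no stage has to hold the whole log in memory except `sort`, which can spill to temporary files.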

Also note, this shell script can't be replaced by elisp, because elisp is not suitable when the file is large: Emacs has to load the entire file into a buffer.

So much for “one language to rule them all”. That is my story.

to shell or not to shell

I realized i wasted tremendous time in the past 10 years writing code in elisp, python, and golang that should have been done in shell scripts, because i was thinking in terms of using one great general-purpose lang. Shell script is truly the best at what it does: it is a language designed for manipulating files, checking status, launching apps, etc.

One concrete example of how shell scripts are better for shell tasks is traversing a directory.

In shell, it's one line: unix find, or PowerShell dir.

In python, perl, elisp, or golang, it's 5 to 20 lines, often involving loading libraries, often more than one, such as sys and path.
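For comparison, here is a minimal shell sketch of that traversal, plus a find replace across the whole tree. The helper names `list_by_ext` and `replace_in_tree` are hypothetical. The in-place edit assumes GNU sed, whose `-i` takes no argument (BSD/macOS sed wants `-i ''`), and FROM is a sed regex that must not contain `/`.

```shell
#!/usr/bin/env bash
# list_by_ext DIR EXT (hypothetical name): every regular file under DIR,
# at any depth, whose name ends in .EXT.
list_by_ext() {
  find "$1" -type f -name "*.$2"
}

# replace_in_tree DIR EXT FROM TO (hypothetical name): regex find/replace,
# in place, across all such files. Assumes GNU sed; FROM and TO must not
# contain "/". "{} +" passes many file names to each sed invocation.
replace_in_tree() {
  find "$1" -type f -name "*.$2" -exec sed -i "s/$3/$4/g" {} +
}
```

The traversal itself is the single `find` call; there is no library to load and no recursion to write.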