Elisp vs Perl: Validate File Links

This page shows two scripts that validate local file links in HTML (i.e. check that each local link points to an existing file). One is written in perl, the other in elisp.

The two scripts' algorithms are not artificially made to be the same; each follows the natural style/idiom of its lang. Both do the same job for my needs.

Perl

For each file, call “process_file”. That function then calls get_links($file_full_path) to get a list of links, then prints each link that leads to a non-existent file.

The heart of the algorithm is the “get_links” function. It reads the file line by line, splits each line by the char “<”, then, for each segment of text, uses regex to find a link. (This assumes that a tag is not broken into more than one line.)
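The split-then-match step can be sketched on a single line of HTML. This is a minimal illustration, not the full script: the sample line and the helper name links_in_line are made up for this example, while the two patterns are the ones used in get_links.

```perl
use strict;
use warnings;

# Hypothetical sample line, not taken from any real page.
my $line = q{<p>See <a href="math.html">math</a> and <img src="i/pi.png" alt="pi"></p>};

# Return the href/src values found in one line of HTML.
sub links_in_line {
    my ($text) = @_;
    my @links;
    # Split on "<" so that each segment holds at most one tag.
    for my $segment ( split m/</, $text ) {
        # Attribute name, quoted value, then a closing ">" later in the segment.
        push @links, $1 if $segment =~ m{href\s*=\s*"([^"]+)".*>}i;
        push @links, $1 if $segment =~ m{src\s*=\s*"([^"]+)".*>}i;
    }
    return @links;
}

print "$_\n" for links_in_line($line);
```

For the sample line, the segments that contain a tag with a link are 「a href="math.html">math」 and 「img src="i/pi.png" alt="pi">」, so the sketch prints math.html and i/pi.png.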

    # -*- coding: utf-8 -*-
    # perl
    # 2004-09-21, 2012-04-07

    # given a dir, check all local links and inline images in the HTML files there. Print a report.
    # XahLee.org

    use strict;
    use Data::Dumper;
    use File::Find;
    use File::Basename;

    my $inDirPath = q{c:/Users/h3/web/xahlee_org/};

    # $inDirPath = $ARGV[0]; # should give a full path; else the $File::Find::dir won't give full path.

    die qq{dir $inDirPath doesn't exist! $!} unless -e $inDirPath;

    ##################################################
    # subroutines

    # get_links($file_full_path) returns a list of values in <a href="…">. Sample elements: q[http://xahlee.org], q[../image.png], q[ab/some.html], q[file.nb], q[mailto:Xah@XahLee.org], q[#reference], q[javascript:f('pop_me.html')]
    sub get_links ($) {
      my $full_file_path = $_[0];
      my @myLinks = ();
      # 3-argument open with a lexical filehandle, so odd chars in the path are not read as a mode
      open (my $fh, '<', $full_file_path) or die qq[error: can not open $full_file_path $!];

      # read each line. Split on char “<”. Then use regex on 「href=…」 or 「src=…」 to get the url. This assumes that a tag 「<…>」 is not broken into more than one line.
      while (my $fileContent = <$fh>) {
        my @textSegments = split(m/</, $fileContent);
        for my $oneLine (@textSegments) {
          if ($oneLine =~ m{href\s*=\s*"([^"]+)".*>}i) { push @myLinks, $1; }
          if ($oneLine =~ m{src\s*=\s*"([^"]+)".*>}i) { push @myLinks, $1; }
        }
      }
      close $fh;
      return @myLinks;
    }

    sub process_file {
      if ( $File::Find::name =~ m[\.html$] && $File::Find::dir !~ m(/xx) ) {
        my @myLinks = get_links($File::Find::name);
        for (@myLinks) {
          s/#.+//; # delete url fragment identifier, eg http://example.com/index.html#a
          s/%20/ /g; # decode percent encode url
          s/%27/'/g;
          # report links that are local (no url scheme) and whose target file does not exist
          if ((!m[^http:|^https:|^mailto:|^irc:|^ftp:|^javascript:]i) && (not -e qq[$File::Find::dir/$_]) ) { print qq[• $File::Find::name $_\n]; }
        }
      }
    }

    find(\&process_file, $inDirPath);

    print "\nDone checking. (any errors are printed above.)\n";

The full, updated code is at: Perl: Validate Local Links

Emacs Lisp

For each file, call “my-process-file”. The file content is inserted into a temporary buffer. Then, the code uses regex search, cursor movement, etc, to make sure that each 「href=…」 or 「src=…」 it finds is really inside a tag 「<…>」 before checking the link.

    ;; -*- coding: utf-8 -*-
    ;; elisp
    ;; 2012-02-01
    ;; check links of all HTML files in a dir

    ;; check only local file links in text patterns of the form:
    ;; < … href="link" …>
    ;; < … src="link" …>

    (setq inputDir "~/web/xahlee_org/" ) ; dir should end with a slash

    (defun my-process-file (fPath)
      "Process the file at FPATH"
      (let ( xurl xpath p1 p2 p-current p-mb (checkPathQ nil) )

        ;; open file
        ;; search for a “href=” or “src=” link
        ;; check if that link points to a existing file
        ;; if not, report it

        (when (not (string-match "/xx" fPath)) ; skip any path that has a file or dir name starting with “xx”
          (with-temp-buffer
            (insert-file-contents fPath)
            (while (re-search-forward "\\(?:href\\|src\\)[ \n]*=[ \n]*\"\\([^\"]+?\\)\"" nil t)
              (setq p-current (point) )
              (setq p-mb (match-beginning 0) )
              (setq xurl (match-string 1))

              ;; find the nearest “<” before the match, and the first “>” after that “<”
              (save-excursion
                (search-backward "<" nil t)
                (setq p1 (point))
                (search-forward ">" nil t)
                (setq p2 (point)) )

              (when (and (< p1 p-mb) (< p-current p2) ) ; the “href="…"” is inside <…>

                ;; set checkPathQ to true for local links and for links to the xahlee sites, eg http://xahlee.info/
                ;; note: xahsite-url-to-filepath is not a built-in emacs function; it must be defined elsewhere
                (if (string-match "^http://\\|^https://\\|^mailto:\\|^irc:\\|^ftp:\\|^javascript:" xurl)
                    (when (string-match "^http://xahlee\\.org\\|^http://xahlee\\.info\\|^http://ergoemacs\\.org" xurl)
                      (setq xpath (xahsite-url-to-filepath (replace-regexp-in-string "#.+$" "" xurl))) ; remove trailing jumper url. For example: href="…#…"
                      (setq checkPathQ t) )
                  (progn
                    (setq xpath (replace-regexp-in-string "%27" "'" (replace-regexp-in-string "#.+$" "" xurl)) )
                    (setq checkPathQ t) ) )

                (when checkPathQ
                  (when (not (file-exists-p (expand-file-name xpath (file-name-directory fPath))))
                    (princ (format "• %s %s\n" (replace-regexp-in-string "^c:/Users/h3" "~" fPath) xurl) ) )
                  (setq checkPathQ nil) ) ) ) ) ) ) )

    (require 'find-lisp)

    (let (outputBuffer)
      (setq outputBuffer "*xah check links output*" )
      (with-output-to-temp-buffer outputBuffer
        (mapc 'my-process-file (find-lisp-find-files inputDir "\\.html$"))
        (princ "Done deal!") ) )

What is Valid HTML

Note that the HTML files are assumed to be W3C valid (i.e. no missing closing tags or missing “>”). However, my code is not general enough to cover arbitrary valid HTML. SGML-based HTML is very complex, and isn't just nested tags, but such HTML is basically never used. The perl and elisp code here work correctly (get all links) for perhaps 99.9% of HTML files out there. (Symbolic links or other alias mechanisms on the file system are not considered.)

Edge Case Examples

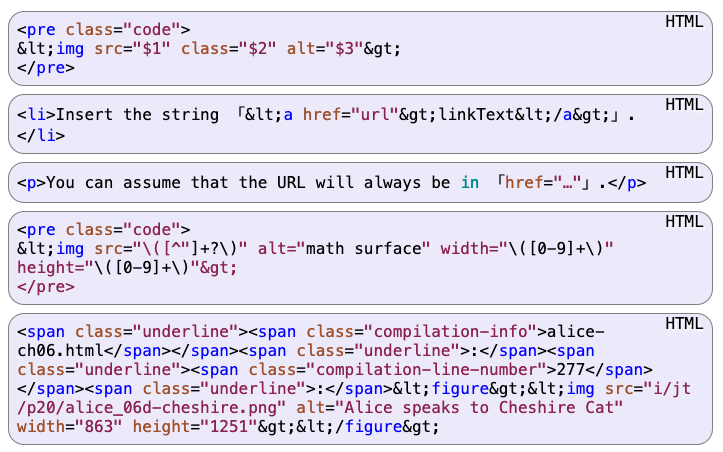

Here are some edge cases. These examples show that you cannot simply use regex to search for the pattern <a href="…" …>. Here's the most basic example:

<a href="math.html" title="x > y">math</a>

Note that the above is actually valid HTML according to W3C's validator. Also, note that pages passing W3C validator are not necessarily valid by W3C's HTML spec. [see W3C HTML Validator Invalid]
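To see why this example is troublesome, here is a small sketch of one naive idea: treat a tag as everything from “<” up to the first “>”. The helper name naive_tag and its pattern are assumptions made for illustration; they are not part of the scripts on this page.

```perl
use strict;
use warnings;

my $html = q{<a href="math.html" title="x > y">math</a>};

# Naive idea: an <a> tag runs from "<a" to the first ">".
# Returns the matched text, or undef if nothing matches.
sub naive_tag {
    my ($text) = @_;
    return $text =~ m{(<a\s[^>]*>)} ? $1 : undef;
}

print naive_tag($html), "\n";
```

The pattern stops at the “>” inside the title value, so the printed “tag” is 「<a href="math.html" title="x >」, cut off in the middle of an attribute.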

One cannot simply use regex to search for the pattern <a href="…" …>, and this is especially so because some HTML pages contain sample HTML code for teaching HTML, and others are programming tutorials containing code examples of using regex to parse HTML. So, the HTML is sometimes HTML embedded in HTML, or HTML code in regex in python code on a HTML page.

The following shows that patterns such as href="…" or src="…" are not necessarily HTML links.
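As one illustration, consider a tutorial page that displays escaped sample code. The sketch below compares a bare attribute search with the split-on-“<” approach used by get_links. The sample HTML and the helper names bare_attribute_matches and split_then_match are assumptions made for this example.

```perl
use strict;
use warnings;

# Hypothetical tutorial page: the first "link" is escaped sample code inside <pre>.
my $html = q{<pre>&lt;a href="fake.html"&gt;</pre> <a href="real.html">real</a>};

# Bare attribute search, with no check that the match sits inside a tag.
sub bare_attribute_matches {
    my ($text) = @_;
    my @found = $text =~ m{(?:href|src)\s*=\s*"([^"]+)"}gi;
    return @found;
}

# Split on "<" first, and require a ">" after the attribute in the same segment.
sub split_then_match {
    my ($text) = @_;
    my @links;
    for my $segment ( split m/</, $text ) {
        push @links, $1 if $segment =~ m{href\s*=\s*"([^"]+)".*>}i;
        push @links, $1 if $segment =~ m{src\s*=\s*"([^"]+)".*>}i;
    }
    return @links;
}

print "bare:  @{[ bare_attribute_matches($html) ]}\n";
print "split: @{[ split_then_match($html) ]}\n";
```

The bare search reports both fake.html and real.html. The split version reports only real.html, because the escaped sample has no literal “>” after its href value.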

Perl vs Emacs Lisp

One interesting thing is to compare the approaches in perl and emacs lisp.

For our case, regex is not powerful enough to deal with the problem by itself, due to the nested nature of HTML.

This is why, in my perl code, I split the file by “<” into segments first, then use regex to deal with each segment, which is now non-nested.

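This will break if you have <a title='x < href="z"' href="math.html">math</a>. The split leaves href="z" as the first href in its segment, so “z” is picked up and “math.html” is missed.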

This cannot be worked around unless you really start to write a real parser.
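Here is a minimal sketch of how a “<” inside an attribute value can mislead the split-then-regex approach. The sample tag (a single-quoted title that contains a quoted href) and the helper name links_in_line are assumptions made for illustration; the two patterns are the ones from get_links.

```perl
use strict;
use warnings;

# Hypothetical tag whose title value contains both "<" and something that looks like a link.
my $html = q{<a title='x < href="z"' href="math.html">math</a>};

# Same split-then-match logic as get_links, applied to one string.
sub links_in_line {
    my ($text) = @_;
    my @links;
    for my $segment ( split m/</, $text ) {
        push @links, $1 if $segment =~ m{href\s*=\s*"([^"]+)".*>}i;
        push @links, $1 if $segment =~ m{src\s*=\s*"([^"]+)".*>}i;
    }
    return @links;
}

print "$_\n" for links_in_line($html);
```

The split cuts the tag at the “<” inside the title, so the segment that gets matched is 「 href="z"' href="math.html">math」. The regex takes the first href it can match, and the sketch prints z while the real link math.html goes unreported.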

The elisp here is more powerful, not because of any lisp features, but because of emacs's buffer datatype. You can think of it as a glorified string datatype, in which you can move a cursor back and forth, use regex to search forward or backward, or save cursor positions (indexes) and grab parts of the text for further analysis.

Also, you might check out my perl tutorial: Perl Tutorial